3.4: The Pauli Algebra

( \newcommand{\kernel}{\mathrm{null}\,}\)

3.4.1 Introduction

Let us consider the set of all 2×2 matrices with complex elements. The usual definitions of matrix addition and scalar multiplication by complex numbers establish this set as a four-dimensional vector space over the field of complex numbers V(4,C) With ordinary matrix multiplication, the vector space becomes, what is called an algebra, in the technical sense explained at the end of Section 2.3. The nature of matrix multiplication ensures that this algebra, to be denoted A2 is associative and noncommutative, properties which are in line with the group-theoretical applications we have in mind.

The name “Pauli algebra” stems, of course, from the fact that A2 was first introduced into physics by Pauli, to fit the electron spin into the formalism of quantum mechanics. Since that time the application of this technique has spread into most branches of physics.

From the point of view of mathematics, A2 is merely a special case of the algebra An of n×n matrices, whereby the latter are interpreted as transformations over a vector space V(n2,C). Their reduction to canonical forms is a beautiful part of modern linear algebra.

Whereas the mathematicians do not give special attention to the case n=2 the physicists, dealing with four-dimensional space-time, have every reason to do so, and it turns out to be most rewarding to develop procedures and proofs for the special case rather than refer to the general mathematical theorems. The technique for such a program has been developed some years ago.

The resulting formalism is closely related to the algebra of complex quaternions, and has been called accordingly a system of hypercomplex numbers. The study of the latter goes back to Hamilton, but the idea has been considerably developed in recent years. The suggestion that the matrices (1) are to be considered symbolically as generalizations of complex numbers which still retain “number-like” properties, is appealing, and we shall make occasional use of it. Yet it seems confining to make this into the central guiding principle. The use of matrices harmonizes better with the usual practice of physics and mathematics.

In the forthcoming systematic development of this program we shall evidently cover much ground that is well known, although some of the proofs and concepts of Whitney and Tisza do not seem to be used elsewhere. However, the main distinctive feature of the present approach is that we do not apply the formalism to physical theories assumed to be given, but develop the geometrical, kinematic and dynamic applications in close parallel with the building up of the formalism.

Since our discussion is meant to be self-contained and economical, we use references only sparingly. However, at a later stage we shall state whatever is necessary to ease the reading of the literature.

3.4.2 Basic Definitions and Procedures

We consider the set A2 of all 2×2 complex matrices

A=(a11a12a21a22)

Although one can generate A2 from the basis

e1=(1000)

e2=(0100)

e3=(0010)

e4=(0001)

in which case the matrix elements are the expansion coefficients, it is often more convenient to generate it from a basis formed by the Pauli matrices augmented by the unit matrix.

Accordingly A2 is called the Pauli algebra. The basis matrices are

σ0=I=(1001)

σ1=(0110)

σ2=(0−ii0)

σ3=(100−1)

The three Pauli matrices satisfy the well known multiplication rules

σ2j=1j=1,2,3

σjσk=−σkσj=iσljkl=123 or an even permutation thereof

All of the basis matrices are Hermitian, or self-adjoint:

σ†μ=σμμ=0,1,2,3

(By convention, Roman and Greek indices will run from one to three and from zero to three, respectively.)

We shall represent the matrix A of Equation ??? as a linear combination of the basis matrices with the coefficient of σμ denoted by aμ. We shall refer to the numbers aμ as the components of the matrix A. As can be inferred from the multiplication rules, Equation ??? , matrix components are obtained from matrix elements by means of the relation

aμ=12Tr(Aσμ)

where Tr stands for trace. In detail,

a0=12(a11+a22)

a1=12(a12+a21)

a2=12(a12−a21)

a3=12(a11−a22)

In practical applications we shall often see that a matrix is best represented in one context by its components, but in another by its elements. It is convenient to have full flexibility to choose at will between the two. A set of four components aμ, denoted by {aμ}, will often be broken into a complex scalar a0 and a complex “vector” {a1,a2,a3}=→a. Similarly, the basis matrices of A2 will be denoted by σ0=1 and {σ1,σ2,σ3}=→σ. With this notation,

A=∑μaμσμ=a01+→a⋅→σ

=(a0+a3a1−ia2a1+ia2a0−a3)

We associate with .each matrix the half trace and the determinant

12TrA=a0

|A|=a20−→a2

The extent to which these numbers specify the properties of the matrix A, will be apparent from the discussion of their invariance properties in the next two subsections. The positive square root of the determinant is in a way the norm of the matrix. Its nonvanishing: |A|≠0 is the criterion for A to be invertible.

Such matrices can be normalized to become unimodular:

A→|A|−1/2A

The case of singular matrices

|A|=a20−→a2=0

calls for comment. We call matrices for which |A|=0, but A≠0, null-matrices. Because of their occurrence, A2 is not a division algebra. This is in contrast, say, with the set of real quaternions which is a division algebra, since the norm vanishes only for the vanishing quaternion.

The fact that null-matrices are important,stems partly from the indefinite Minkowski metric. However, entirely different applications will be considered later.

We list now some practical rules for operations in A2, presenting them in terms of matrix components rather than the more familiar matrix elements.

To perform matrix multiplications we shall make use of a formula implied by the multiplication rules, Equation ???:

(→a⋅→σ)(→b⋅→σ)=→a⋅→bI+i(→a×→b)⋅→σ

where →a and →b are complex vectors.

Evidently, for any two matrices A and B

[A,B]=AB−BA=2i(→a×→b)⋅→σ

The matrices A and B commute, if and only if

→a×→b=0

that is, if the vector parts →a and →b are “parallel” or at least one of them vanishes.

In addition to the internal operations of addition and multiplication, there are external operations on A2 as a whole, which are analogous to complex conjugation. The latter operation is an involution, which means that (z∗)∗=z. Of the three involutions any two can be considered independent.

In A2 we have two independent involutions which can be applied jointly to yield a third:

A→A=a0I+→a⋅→σ

A→A†=a∗0I+→a∗⋅→σ

A→˜A=a0I−→a⋅→σ

A→˜A†=ˉA=a∗0I−→a∗⋅→σ

The matrix A† is the Hermitian adjoint of A. Unfortunately, there is neither an agreed symbol, nor a term for ˜A Whitney called it Pauli conjugate, other terms are quaternionic conjugate or hyper-conjugate A† (see Edwards, l.c.). Finally ˉA is called complex reflection.

It is easy to verify the rules

(AB)†=B†A†

(~AB)=˜B˜A

(¯AB)=ˉBˉA

According to Equation ??? the operation of complex reflection maintains the product relation in A2 it is an automorphism. In contrast, the Hermitian and Pauli conjugations are anti-automorphic.

It is noteworthy that the three operations ∼,t,−, together with the identity operator, form a group (the four-group, “Vierergruppe”). This is a mark of closure: we presumably left out no important operator on the algebra.

In various contexts any of the three conjugations appears as a generalization of ordinary complex conjugation.

Here are a few applications of the conjugation rules.

A˜A=(a20−→a2)1=|A|1

For invertible matrices

A−1=˜A|A|

For unimodular matrices we have the useful rule:

A−1=˜A

A Hermitian marrix A=A† has real components h0,→h. We define a matrix to be positive if it is Hermitian and has a positive trace and determinant:

h0>0,|H|=(h20−→h2)>0

If H is positive and unimodular, it can be parametrized as

H=cosh(μ/2)1+sinh(μ/2)ˆh⋅→σ=exp{(μ/2)ˆh⋅→σ}

The matrix exponential is defined by a power series that reduces to the trigonometric expression. The factor 1/2 appears only for convenience in the next subsection.

In the Pauli algebra, the usual definition U†=U−1 for a unitary matrix takes the form

u∗01+→u∗⋅→σ=|U|−1(u01−→u⋅→σ)

If U is also unimodular, then

u∗0=u0= real

→u∗=→u= imaginary

and

u20−→u⋅→u=u20+→u⋅→u∗=1

U=cos(ϕ/2)1−isin(ϕ/2)ˆu⋅→σ=exp(−i(ϕ/2)ˆu⋅→σ)

A unitary unimodular matrix can be represented also in terms of elements

U=(ξ0−ξ∗1ξ1ξ∗0)

with

|ξ0|2+|ξ1|2=1

where ξ0,ξ1 are the so-called Cayley-Klein parameters. We shall see that both this form, and the axis-angle representation, Equation ???, are useful in the proper context.

We turn now to the category of normal matrices N defined by the condition that they commute with their Hermitian adjoint: N†N=NN† Invoking the condition, Equation ??? , we have

→n×→n∗=0

implying that n∗ is proportional to n, that is all the components of →n must have the same phase. Normal matrices are thus of the form

N=n01+nˆn⋅→σ

where n0 and n n are complex constants and hatn is a real unit vector, which we call the axis of N. In particular, any unimodular normal matrix can be expressed as

N=cosh(κ/2)1+sinh(κ/2)ˆn⋅→σ=exp((κ/2)ˆn⋅→σ)

where κ=μ−iϕ,−∞<μ<∞,0≤ϕ<4π, and ˆn is a real unit vector. If ˆn points in the "3" direction, we have

N0=exp[(κ2)σ3]=(exp(κ2)00exp(−κ2))

Thus the matrix exponentials, Equations ???, ??? and ???, are generalizations of a diagonal matrix and the latter is distinguished by the requirement that the axis points in the z direction.

Clearly the normal matrix, Equation ???, is a commuting product of a positive matrix like Equation ??? with ˆh=ˆn and a unitary matrix like Equation ???, with ˆu=ˆn

N=HU=UH

The expressions in Equation ??? are called the polar forms of N, the name being chosen to suggest that the representation of N by H and U is analogous to the representation of a complex number z by a positive number r and a phase factor:

z=rexp(−iϕ/2)

We shall show that, more generally, any invertible matrix has two unique polar forms

A=HU=UH′

but only the polar forms of normal matrices display the following equivalent special features:

1. H and U commute

2. ˆh=ˆu=ˆn

3. H′=H

We see from the polar decomposition theorem that our emphasis on positive and unitary matrices is justified, since all matrices of A2 can be produced from such factors. We proceed now to prove the theorem expressed in Equation ??? by means of an explicit construction.

First we form the matrix AA†, which is positive by the criteria ???:

a0a∗0+→a⋅→a∗>0

|A||A†|>0

Let AA† be expressed in terms of an axis ˆh and the hyperbolic angle μ:

AA†=b(coshμ1+sinhμˆh⋅ˆσ)=bexp(μˆh⋅ˆσ)

where b is a positive constant. We claim that the Hermitian component of A is the positive square root of ???

H=(AA†)1/2=b1/2exp(μ2ˆh⋅ˆσ)

with

U=H−1A,A=HU

That U is indeed unitary is easily verified:

U†=A†H−1,U−1=A−1H

and these expressions are equal by Equation ???.

From Equation ??? we get

A=U(U−1HU)

and

A=UH′ with H′=U−1HU

It remains to be shown that the polar forms ??? are unique. Suppose indeed, that for a particular A we have two factorizations

A=HU=H1U1

then

AA†=H2=H21

But, since AA† has a unique positive square root, H1=H, and

U=H−11A=H−1A=U q.e.d.

Polar forms are well known to exist for any n×n matrix, although proofs of uniqueness are generally formulated for abstract transformations rather than for matrices, and require that the transformations be invertable.

3.4.3 The restricted Lorentz group

Having completed the classification of the matrices of A2, we are ready to interpret them as operators and establish a connection with the Lorentz group. The straightforward procedure would be to introduce a 2-dimensional complex vector space V(∈,C). By using the familiar bra-ket formalism we write

A|ξ⟩=|ξ′⟩

A†⟨ξ|=⟨ξ′|

The two-component complex vectors are commonly called spinors. We shall study their properties in detail in Section 5. The reason for this delay is that the physical interpretation of spinors is a

subtle problem with many ramifications. One is well advised to consider at first situations in which the object to be operated upon can be represented by a 2 × 2 matrix.

The obvious choice is to consider Hermitian matrices, the components of which are interpreted as relativistic four-vectors. The connection between four-vectors and matrices is so close that it is often convenient to use the same symbol for both:

A=a01+→a⋅→σ

A={a0,→a}

We have

a20−→a2=|A|=12Tr(AˉA)

or more generally

a0b0−→a⋅→b=12Tr(AˉB)

A Lorentz transformation is defined as a linear transformation

{a0,→a}=L{a′0,→a′}

that leaves the expression ??? and hence also ??? invariant. We require moreover that the sign of the “time component” a0 be invariant (orthochronic Lorentz transformation L↑) and that the determinant of the 4x4 matrix L be positive (proper Lorentz transformation L+). If both conditions are satisfied, we speak of the restricted Lorentz group L↑+. This is the only one to be of current interest for us, and until further notice “Lorentz group” is to be interpreted in this restricted sense.

Note that A can be interpreted as any of the four-vectors discussed in Section 3.2: R={r,→r}

K={k0,→k},P={p0,→p}

Although these vectors and their matrix equivalents have identical transformation properties, they differ in the possible range of their determinants. A negative |P| can arise only for an unphysical imaginary rest mass. By contrast, a positive R corresponds to a time-like displacement pointing toward the future, an R with a negative |R| to a space-like displacement and |R|=0 is associated with the light cone. For the wave vector we have by definition |K|=0.

To describe a Lorentz transformation in the Pauli algebra we try the “ansatz”

A′=VAW

with |V|=|W|=1 in order to preserve |A|. Reality of the vector, i.e., hermiticity of the matrix A is preserved if the additional condition W=V† is satisfied. Thus the transformation

A′=VAV†

leaves expression ??? invariant. It is easy to show that ??? is invariant as well.

The complex reflection ˉA transforms as

¯A′=ˉVˉA˜V

and the product of two four-vectors:

(AˉB)′=VAV†ˉVˉB˜V=V(AˉB)V−1

This is a so-called similarity transformation. By taking the trace of Equation ??? we confirm that the inner product ??? is invariant under ???. We have to remember that a cyclic permutation does not affect the trace of a product of matrices. Thus Equation ??? indeed induces a Lorentz transformation in the four-vector space of A.

It is well known that the converse statement is also true: to every transformation of the restricted Lorentz group L↑+ there are associated two matrices differing-only by sign (their parameters ϕ differ by 2π)) in such a fashion as to constitute a two-to-one homomorphism between the group of unimodular matrices SL(2,C) and the group L↑+. It is said also that SL(2,C) provides a two-valued representation of L↑+. We shall prove this statement by demonstrating explicitly the connection between the matrices V and the induced, or associated group operations.

We note first that A and ˉA correspond in the tensor language to the contravariant and the covariant representation of a vector. We illustrate the use of the formalism by giving an explicit form for the inverse of ???

A=V−1A′V†−1≡˜VA′ˉV

We invoke the polar decomposition theorem Equation ??? of Section ??? and note that it is sufficient to establish this connection for unitary and positive matrices respectively.

Consider at first

A′=UAU†≡UAU−1

with

U(ˆu,ϕ2)≡exp(−iϕ2ˆu⋅→σ)u21+u22+u23=1,0≤ϕ<4π

The set of all unitary unimodular matrices described by Equation ??? form a group that is commonly called SU(2).

Let us decompose →a:

→a=→a‖

\vec{a}_{\|}=(\vec{a} \cdot \hat{u}) \hat{u}, \quad \vec{a}_{\perp}=\vec{a}-\vec{a}_{\|}=\hat{u} \times(\vec{a} \times \hat{u})\label{78}

It is easy to see that Equation \ref{75} leaves a_{0} \text { and } a_{\|} invariant and induces a rotation around \hat{u} by an angle \phi: R\{\hat{u}, \phi\}.

Conversely, to every rotation R\{\hat{u}, \phi\} there correspond two matrices:

U\left(\hat{u}, \frac{\phi}{2}\right) \quad \text { and } \quad U\left(\hat{u}, \frac{\phi+2 \pi}{2}\right)=-U\left(\hat{u}, \frac{\phi}{2}\right)\label{79}

We have 1 \rightarrow 2 homomorphism between \mathcal{S O}(3) \text { and } \mathcal{S} \mathcal{U}(2), the latter is said to be a two-valued representation of the former. By establishing this correspondence we have solved the problem of parametrization formulated on page 13. The nine parameters of the orthogonal 3 × 3 matrices are reduced to the three independent ones of U\left(\hat{u}, \frac{\phi}{2}\right). Moreover we have the simple result

U^{n}=\exp \left(-\frac{i n \phi}{2} \hat{u} \cdot \vec{\sigma}\right)\label{80}

which reduces to the de Moivre theorem if \hat{n} \cdot \vec{\sigma}=\sigma_{3}

Some comment is in order concerning the two-valuedness of the \mathcal{S U}(2) representation. This comes about because of the use of half angles in the algebraic formalism which is deeply rooted in the geometrical structure of the rotation group. (See the Rodrigues-Hamilton theorem in Section 2.2.)

Whereas the two-valuedness of the \mathcal{S U}(2) representation does not affect the transformation of the A vector based on the bilateral expression \ref{75}, the situation will be seen to be different in the spinorial theory based on Equation \ref{62}, since under certain conditions the sign of the spinor |\xi\rangle is physically meaningful.

The above discussion of the rotation group is incomplete even within the classical theory. The rotation R\{\hat{u}, \phi\} leaves vectors along \hat{u} unaffected. A more appropriate object to be rotated is the Cartesian triad, to be discussed in Section 5.

We consider now the case of a positive matrix V=H

A^{\prime}=H A H\label{81}

with

H=\exp \left(\frac{\mu}{2} \hat{h} \cdot \sigma\right)\label{82}

h_{1}^{2}+h_{2}^{2}+h_{3}^{2}=1, \quad-\infty<\mu<\infty\label{83}

We decompose \vec{a} as

\vec{a}=a \hat{h}+\vec{a}_{\perp}\label{84}

and using the fact that (\vec{a} \cdot \vec{\sigma}) \text { and }(\vec{b} \cdot \vec{\sigma}) \text { commute for } \vec{a} \| \vec{b} and anticommute for \vec{a} \perp \vec{b}, we obtain

A^{\prime}=\exp \left(\frac{\mu}{2} \hat{h} \cdot \sigma\right)\left(a_{0} 1+a \hat{h} \cdot \sigma+\vec{a}_{\perp} \cdot \sigma\right) \exp \left(\frac{\mu}{2} \hat{h} \cdot \sigma\right)\label{85}

=\exp (\mu \hat{h} \cdot \sigma)\left(a_{0} 1+\vec{a} \hat{h} \cdot \sigma\right)+\vec{a}_{\perp} \cdot \sigma\label{86}

Hence

a_{0}^{\prime}=\cosh \mu a_{0}+\sinh \mu a\label{87}

a^{\prime}=\sinh \mu a_{0}+\cosh \mu a_{0}\label{88}

\vec{a}_{\perp}^{\prime}=\vec{a}_{\perp}\label{89}

This is to be compared with Table 3.1, but remember that we have shifted from the passive to the active interpretation, from alias to alibi.

Positive matrices with a common axis form a group (Wigner’s “little group”), but in general the product of Hermitian matrices with different axes are not Hermitian. There arises a unitary factor, which is the mathematical basis for the famous Thomas precession.

Let us consider now a normal matrix

V=N=H\left(\hat{k}, \frac{\mu}{2}\right) U\left(\hat{k}, \frac{\phi}{2}\right)=\exp \left(\frac{\mu-i \phi}{2} \hat{n} \cdot \sigma\right)\label{90}

where we have the commuting product of a rotation and a Lorentz transformation with the same axis \hat{n}. Such a constellation is called a Lorentz 4-screw

An arbitrary sequence of pure Lorentz transformations and pure rotations is associated with a pair of matrices V and −V, which in the general case is of the form

H\left(\hat{h}, \frac{\mu}{2}\right) U\left(\hat{u}, \frac{\phi}{2}\right)=U\left(\hat{u}, \frac{\phi}{2}\right) H^{\prime}\left(\hat{h}^{\prime}, \frac{\mu}{2}\right)\label{91}

According to Equation \ref{58} of Section \ref{2}, H \text { and } H^{\prime} are connected by a similarity transformation, which does not affect the angle \mu but only the axis of the transformation. (See the next section.)

This matrix depends on the 6 parameters, \hat{h}, \mu, \hat{u}, \phi and thus we have solved the general problem of parametrization mentioned above.

For a normal matrix \hat{h}=\hat{u}=\hat{n} and the number of parameters is reduced to 4.

Our formalism enables us to give a closed form for two arbitrary normal matrices and the corresponding 4-screws.

\left[N, N^{\prime}\right]=2 i \sinh \frac{\kappa}{2} \sinh \frac{\kappa}{2}\left(\hat{n} \times \hat{n}^{\prime}\right) \cdot \vec{\sigma}\label{92}

where \kappa=\mu-i \phi, \kappa^{\prime}=\mu^{\prime}-i \phi^{\prime}

In the literature the commutation relations are usually given in terms of infinitesimal operators which are defined as follows:

U\left(\hat{u}_{k}, \frac{d \phi}{2}\right)=1-\frac{i}{2} d \phi \sigma_{k}=1+d \phi I_{k}\label{93}

I_{k}=-\frac{i}{2} \sigma_{k}\label{94}

H\left(\hat{h}_{k}, \frac{d \mu}{2}\right)=1+\frac{d \mu}{2} \sigma_{k}=1+d \mu L_{k}\label{95}

L_{k}=\frac{1}{2} \sigma_{k}\label{96}

The commutation relations are

\left[I_{1}, I_{2}\right]=I_{3}\label{97}

\left[L_{1}, L_{2}\right]=-I_{3}\label{98}

\left[L_{1}, I_{2}\right]=L_{3}\label{99}

and cyclic permutations.

It is a well known result of the Lie-Cartan theory of continuous group that these infinitesimalgenerators determine the entire group. Since we have represented these generators in \mathcal{S} \mathcal{L}(2, C), we have completed the demonstration that the entire group L_{+}^{\uparrow} is accounted for in our formalism.

3.4.4 Similarity classes and canonical forms of active transformations

It is evident that a Lorentz transformation induced by a matrix H\left(\hat{h}, \frac{\mu}{2}\right) assumes a particularly simple form if the z-axis of the coordinate system is placed in the direction of \hat{h}. The diagonal matrix H\left(\hat{z}, \frac{\mu}{2}\right) is said to be the canonical form of the transformation. This statement is a special case of the problem of canonical forms of linear transformations, an important chapter in linear algebra.

Let us consider a linear mapping in a vector space. A particular choice of basis leads to a matrix representation of the mapping, and representations associated with different frames are connected by similarity transformations. Let A_{1} be an arbitrary and S an invertible matrix. A similarity transformation is effected on A, by

A_{2}=S A_{1} S^{-1}\label{100}

Matrices related by similarity transformation are called similar, and matrices similar to each other constitute a similarity class.

In usual practice the mapping-refers to a vector space as in Equation \ref{62} of Section 3.4.3:

A_{1}|\xi\rangle_{1}=\left|\xi^{\prime}\right\rangle_{1}\label{101}

The subscript refers to the basis “1.” A change of basis \Sigma_{1} \rightarrow \Sigma_{2} is expressed as

|\xi\rangle_{2}=S|\xi\rangle_{1}, \quad\left|\xi^{\prime}\right\rangle_{2}=S\left|\xi^{\prime}\right\rangle_{1}\label{102}

Inserting into Equation \ref{101} we obtain

A_{1} S^{-1}|\xi\rangle_{2}=S^{-1}|\xi\rangle_{2}\label{103}

and hence

A_{2}|\xi\rangle_{2}=|\xi\rangle_{2}\label{104}

where A_{2} is indeed given by Equation \ref{100}.

The procedure we have followed thus far to represent Lorentz transformations in A_{2} does not quite follow this standard pattern.

We have been considering mappings of the space of fourvectors which in turn were represented as 2 × 2 complex matrices. Thus both operators and operands are matrices of A_{2}. In spite of this difference in interpretation, the matrix representations in different frames are still related according to Equation \label{100}.

This can be shown as follows. Consider a unimodular matrix A, that induces a Lorentz transformation in P-space, whereby the matrices refer to the basis \Sigma_{1}:

P_{1}^{\prime}=A_{1} P_{1} A_{1}^{\dagger}\label{105}

We interpret Equation \ref{105} in the active sense as a linear mapping of P-space on itself that corresponds physically to some dynamic process that alters P in a linear way.

We shall see in Section 4 that the Lorentz force acting on a charged particle during the time dt can be indeed considered as an active Lorentz transformation. (See also page 26.)

The process has a physical meaning independent of the frame of the observer, but the matrix representations of P, P^{\prime} and of A depend on the frame. The four-momenta in the two frames are connected by a Lorentz transformation interpreted in the passive sense:

P_{2}=S P_{1} S^{\dagger}\label{106}

P_{2}=S P_{1}^{\prime} S^{\dagger}\label{107}

with |S|=1. Solving for P, P^{\prime} and inserting into Equation \ref{105}, we obtain

S^{-1} P_{2}^{\prime} \tilde{S}^{\dagger}=A_{1} S^{-1} P_{2} \tilde{S}^{\dagger} A_{1}^{\dagger} S\label{108}

or

P_{2}^{\prime}=A_{2} P_{2} A_{1}^{\dagger}\label{109}

where A_{2} and A_{1} are again connected by the similarity transformation \ref{100}.

We may apply the polar decomposition theorem to the matrix S. In the special case that S is unitary, we speak of a unitary similarity transformation corresponding to the rotation of the coordinate system discussed at the onset of this section. However, the general case will lead us to less obvious physical applications.

The above considerations provide sufficient motivation to examine the similarity classes of A_{2}. We shall see that all of them have physical applications, although the interpretation of singular mappings will be discussed only later

The similarity classes can be characterized in several convenient ways. For example, one may use two independent similarity invariants shared by all the matrices A=a_{0} l+\vec{a} \cdot \vec{\sigma} in the class. We shall find it convenient to choose

1. the determinant |A|, and

2. the quantity \vec{a}^{2}

The trace is also a similarity invariant, but it is not independent: a_{0}^{2}=|A|+\vec{a}^{2}

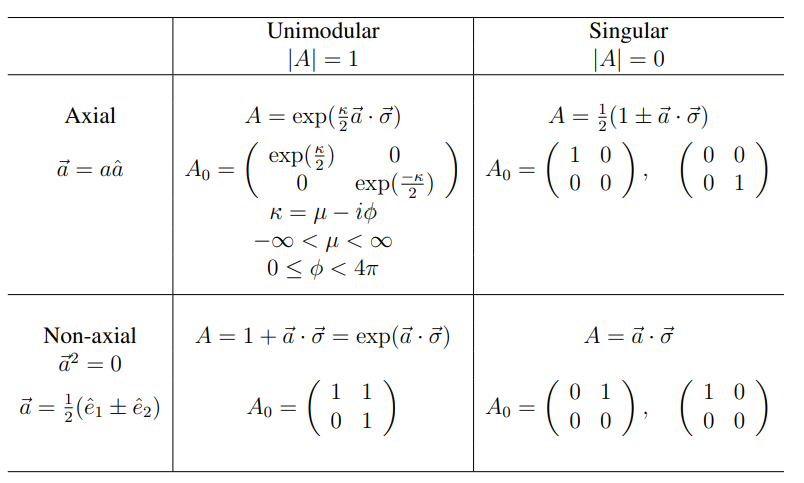

Alternatively, one can characterize the whole class by one representative member of it, some matrix A_{0} called the canonical form for the class (See Table 3.2).

We proceed at first to characterize the similarity classes in terms of the invariants 1 and 2. We recall that a matrix A is invertible if |A| \neq 0 and singular if |A|=0. Without significant loss of generality, we can normalize the invertible matrices of A_{2} to be unimodular, so that we need discuss only classes of singular and of unimodular matrices. As a second invariant to characterize a class, we choose \vec{a} \cdot \vec{a} and we say that a matrix A is axial if \vec{a} \cdot \vec{a} \neq 0. In this case, there exists a unit vector \hat{a} (possibly complex) such that \vec{a}=a \cdot \hat{a} where a is a complex constant. The unit vector \hat{a} is called the axis of A. Conversely, the matrix A is non-axial if \vec{a} \cdot \vec{a}=0, the vector \vec{a} is called isotropic or a null-vector, it cannot be expressed in terms of an axis.

The concept of axis as here defined is the generalization of the real axis introduced in connection with normal matrices on page 33. The usefulness of this concept is apparent from the following theorem:

Theorem 1. For any two unit vectors \hat{v}_{1}, \text { and } \hat{v}_{2}, real or complex, there exists a matrix S such that

\hat{v}_{2} \cdot \vec{\sigma}=S \hat{v}_{1} \cdot \vec{\sigma} S^{1}\label{110}

Proof. We construct one such matrix S from the following considerations. If \hat{v}_{1}, \text { and } \hat{v}_{2} are real, then let S be the unitary matrix that rotates every vector by an angle \pi about an axis which bisects the angle between \hat{v}_{1}, \text { and } \hat{v}_{2}:

S=-i \hat{s} \cdot \vec{\sigma}\label{111}

where

\hat{s}=\frac{\hat{v}_{1}+\hat{v}_{2}}{\sqrt{2 \hat{v}_{1} \cdot \hat{v}_{2}+2}}\label{112}

Even if \hat{v}_{1}, \text { and } \hat{v}_{2} are not real, it is easily verified that S as given formally by Equations \ref{111} and \ref{112}, does indeed send \hat{v}_{1} \text { to } \hat{v}_{2}. Naturally S is not unique; for instance, any matrix of the form

S=\exp \left\{\left(\frac{\mu_{2}}{2}-i \frac{\phi_{2}}{2}\right) \vec{v}_{2} \cdot \vec{\sigma}\right\}(-i \hat{s} \cdot \vec{\sigma}) \exp \left\{\left(\frac{\mu_{1}}{2}-i \frac{\phi_{1}}{2}\right) \vec{v}_{1} \cdot \vec{\sigma}\right\}\label{113}

will send \hat{v}_{1} \text { to } \hat{v}_{2}

This construction fails only if

\hat{v}_{1} \cdot \hat{v}_{2}+1=0\label{114}

that is for the transformation \hat{v}_{1} \rightarrow-\hat{v}_{2}. In this trivial case we choose

S=-i \hat{s} \cdot \vec{\sigma}, \quad \text { where } \quad \hat{s} \perp \vec{v}_{1}\label{115}

Since in the Pauli algebra diagonal matrices are characterized by the fact that their axis is \hat{x}_{3}, we have proved the following theorem:

Theorem 2. All axial matrices are diagonizable, but normal matrices and only normal matrices are diagonizable by a unitary similarity transformation.

The diagonal forms are easily ascertained both for singular and the unimodular cases. (See Table 3.2.) Because of their simplicity they are called also canonical forms. Note that they can be multiplied by any complex number in order to get all of the axial matrices of \mathcal{A}_{2}

The situation is now entirely clear: the canonical forms show the nature of the mapping; a unitary similarity transformation merely changes the geometrical orientation of the axis. The angle of circular and hyperbolic rotation specified by a_{0} is invariant. A general transformation complexifies the axis. This situation comes about if in the polar form of the matrix A = HU, the factors have distinct real axes, and hence do not commute.

There remains to deal with the case of nonaxial matrices. Consider A=\vec{a} \cdot \vec{\sigma} \text { with } \vec{a}^{2}=0. Let us decompose the isotropic vector \vec{a} into real and imaginary parts:

\vec{a}=\vec{\alpha}+i \vec{\beta}\label{116}

Hence \vec{\alpha}^{2}-\vec{\beta}^{2}=0 \text { and } \alpha \cdot \beta=0. Since the real and the imaginary parts of a are perpendicular, we can rotate these directions by a unitary similarity transformation into the x- and y-directions respectively. The transformed matrix is

\frac{\alpha}{2}\left(\sigma_{1}+i \sigma_{2}\right)=\left(\begin{array}{ll} 0 & \alpha \\ 0 & 0 \end{array}\right)\label{117}

with a positive. A further similarity transformation with

S=\left(\begin{array}{cc} \alpha^{-1 / 2} & 0 \\ 0 & \alpha^{1 / 2} \end{array}\right)\label{118}

transforms Equation \ref{117} into the canonical form given in Table 3.2.

As we have seen in Section 3.4.3 all unimodular matrices induce Lorentz transformations in Minkowski, or four-momentum space. According to the results summarized in Table 3.2, the mappings induced by axial matrices can be brought by similarity transformations into so-called Lorentz four-screws consisting of a circular and hyperbolic rotation around the same axis, or in other words: a rotation around an axis, and a boost along the same axis.

What about the Lorentz transformation induced by a nonaxial matrix? The nature of these transformations is very different from the common case, and constitutes an unusual limiting situation. It is justified to call it an exceptional Lorentz transformation. The special status of these transformations was recognized by Wigner in his fundamental paper on the representations of the Lorentz group.

The present approach is much more elementary than Wigner’s, both from the point of view of mathematical technique, and also the purpose in mind. Wigner uses the standard algebraic technique of elementary divisors to establish the canonical Jordan form of matrices. We use, instead a specialized technique adapted to the very simple situation in the Pauli algebra. More important, Wigner was concerned with the problem of representations of the inhomogeneous Lorentz group, whereas we consider the much simpler problem of the group structure itself, mainly in view of application to the electromagnetic theory.

The intuitive meaning of the exceptional transformations is best recognized from the polar form of the generating matrix. This can be carried out by direct application of the method discussed at the end of the last section. It is more instructive, however, to express the solution in terms of (circular and hyperbolic) trigonometry.

We ask for the conditions the polar factors have to satisfy in order that the relation

1+\hat{a} \cdot \vec{\sigma}=H\left(\hat{h}, \frac{\mu}{2}\right) U\left(\hat{u}, \frac{\phi}{2}\right)\label{119}

should hold with \mu \neq 0, \phi \neq 0. Since all matrices are unimodular, it is sufficient to consider the equality of the traces:

\frac{1}{2} \operatorname{Tr} A=\cosh \left(\frac{\mu}{2}\right) \cos \left(\frac{\phi}{2}\right)-i \sinh \left(\frac{\mu}{2}\right) \sin \left(\frac{\phi}{2}\right) \hat{h} \cdot \hat{u}=1\label{120}

This condition is satisfied if and only if

\hat{h} \cdot \hat{u}=0\label{121}

and

\cosh \left(\frac{\mu}{2}\right) \cos \left(\frac{\phi}{2}\right)=1\label{122}

The axes of circular and hyperbolic rotation are thus perpendicular, to each other and the angles of these rotations are related in a unique fashion: half of the circular angle is the so-called Gudermannian function of half of the hyperbolic angle

\frac{\phi}{2}=g d\left(\frac{\mu}{2}\right)\label{123}

However, if \mu \text { and } \phi are infinitesimal, we get

\left(1+\frac{\mu^{2}}{2}+\ldots\right)\left(1+\frac{\phi^{2}}{2}+\ldots\right)=1, \text { i.e. }\label{124}

\mu^{2}-\phi^{2}=0\label{125}

We note finally that products of exceptional matrices need not be exceptional, hence exceptional Lorentz transformations do not form a group.

In spite of their special character, the exceptional matrices have interesting physical applications, both in connection with the electromagnetic field as discussed in Section 4, and also for the construction of representations of the inhomogeous Lorentz group [Pae69, Wig39].

We conclude by noting that the canonical forms of Table 3.2 lend themselves to express the powers A_{0}^{k} in simple form.

For the axial singular matrix we have

A_{0}^{2}=A\label{126}

These projection matrices are called idempotent. The nonaxial singular matrices are nilpotent:

A_{0}^{2}=0\label{127}

The exceptional matrices (unimodular nonaxial) are raised to any power k (even non-real) by the formula

A^{k}=1^{k}(1+k \vec{a} \cdot \vec{\sigma})\label{128}

=1^{k} \exp (k \vec{a} \cdot \vec{\sigma})\label{129}

For integer k, the factor 1^{k} becomes unity. The axial unimodular case is handled by formulas that are generalizations of the well known de Moivre formulas:

A^{k}=1^{k} \exp \left(k \frac{\kappa}{2}+k l 2 \pi i\right)\label{130}

where l is an integer. For integer k, Equation \ref{130} reduces to

A^{k}=\exp \left(k\left(\frac{\kappa}{2}\right) \vec{a} \cdot \vec{\sigma}\right)\label{131}

In connection with these formulae, we note that for positive A(\phi=0 and a real, there is a unique positive \mathrm{m}^{t h} root of A:

A=\exp \left\{\left(\frac{\mu}{2}\right) \hat{a} \cdot \vec{\sigma}\right\}\label{132}

A^{1 / m}=\exp \left\{\left(\frac{\mu}{2 m}\right) \hat{a} \cdot \vec{\sigma}\right\}\label{133}

The foregoing results are summarized in Table 3.2.

Table 3.2: Canonical Forms for the Simlarity classes of A_{2}