1.28: Solving the Wave Equation with Fourier Transforms


[** This chapter is under construction **]

In the next chapter we will introduce the wave equation due to its importance in understanding the dynamics of the primordial plasma. In one dimension the wave equation can be written as

\[ \frac{\partial^2 \Psi(x,t)}{\partial t^2} = v^2 \frac{\partial^2 \Psi(x,t)}{\partial x^2}. \]

We will leave a discussion of the physics of this equation and the primordial plasma to the next chapter. Here, we will focus on the use of Fourier methods to solve for the evolution of Ψ(x,t) assuming it obeys the above equation and that we are given the value of Ψ and its time derivative at some initial time for all values of x. Fourier methods have a broad range of applications in physics. They have utility well beyond the dynamics of the wave equation in both experimental and theoretical physics. For the student of physics, time spent developing facility with Fourier transforms is time well spent.

Let's see what happens with an ansatz of the form

\[ \Psi(x,t) = A(t)\cos(kx); \]

i.e., let's assume the wave has a fixed spatial pattern of a cosine of wavelength λ = 2π/k, with an amplitude that varies with time.

Plugging this ansatz into Eq. ??? we find that it is a solution of Eq. ??? as long as

\[ \ddot{A}(t) = -v^2 k^2 A(t); \]

i.e., as long as A(t) obeys a harmonic oscillator equation.


Do the above "plugging in" to arrive at Eq. ???

- Answer

-

TBD

The general solution to Eq. ??? is

\[ A(t) = \alpha\cos(kvt) + \beta\sin(kvt). \]

The constants α and β can be determined from the initial conditions \(A(0)\) and \(\dot{A}(0)\).
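To spell out that step, evaluate the general solution and its time derivative at t = 0 (a short worked calculation; nothing here goes beyond the formula for A(t) given above):

\[
\begin{aligned}
A(0) &= \alpha\cos(0) + \beta\sin(0) = \alpha, \\
\dot{A}(0) &= -kv\,\alpha\sin(0) + kv\,\beta\cos(0) = kv\,\beta,
\end{aligned}
\]

so that \(\alpha = A(0)\) and \(\beta = \dot{A}(0)/(kv)\).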

Because it will be helpful to see a specific solution, let's assume the ansatz Eq. ???, set k=2π/Mpc, A(0)=1 and \(\dot{A}(0)=0\). Note that this means we have a wave of wavelength 1 Mpc that starts off at rest with unit amplitude. One can show that in this case α=1, β=0, and the solution for Ψ is therefore

\[ \Psi(x,t) = \cos(kvt)\cos(kx) \]

where k=2π/Mpc. This solution is graphed in the following animation:

Check that Eq. ??? satisfies the wave equation and is consistent with the given initial conditions.

- Answer

-

TBD
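As a numerical sanity check (not a substitute for the analytic exercise), the sketch below evaluates the standing-wave solution on a grid and uses central finite differences to estimate how far it is from satisfying the wave equation. The wave speed v = 1 and the step size h are illustrative assumptions; the text does not fix a value for v.

```python
import numpy as np

# Assumed illustrative units: lengths in Mpc and a wave speed of v = 1
# (the text leaves v unspecified).
v = 1.0
k = 2 * np.pi  # wavenumber for a 1 Mpc wavelength


def psi(x, t):
    """Standing-wave solution Psi(x, t) = cos(k v t) cos(k x)."""
    return np.cos(k * v * t) * np.cos(k * x)


def wave_equation_residual(x, t, h=1e-4):
    """Estimate d^2 Psi/dt^2 - v^2 d^2 Psi/dx^2 with central differences."""
    d2_dt2 = (psi(x, t + h) - 2 * psi(x, t) + psi(x, t - h)) / h**2
    d2_dx2 = (psi(x + h, t) - 2 * psi(x, t) + psi(x - h, t)) / h**2
    return d2_dt2 - v**2 * d2_dx2


x = np.linspace(0.0, 2.0, 201)
max_residual = np.max(np.abs(wave_equation_residual(x, t=0.3)))

# Initial conditions: Psi(x, 0) = cos(k x) with zero initial velocity.
h = 1e-4
initial_velocity = (psi(x, h) - psi(x, -h)) / (2 * h)

print("max |residual| of the wave equation:", max_residual)
print("max |initial velocity|:", np.max(np.abs(initial_velocity)))
```

Both printed numbers should be tiny compared with the individual terms in the equation, which are of order k² ≈ 39.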

We've seen a specific solution to the wave equation. We're now going to work our way toward a completely general solution, and a nifty solution method that relies on the properties we've just seen above for how a cosine (or sine, as we'll see) spatial pattern evolves over time.

Our general solution method will exploit the fact that any sum of two solutions to the wave equation is itself a solution to the wave equation.

Show that if Ψ1(x,t) and Ψ2(x,t) are both solutions of Eq. ??? then Ψ1(x,t)+Ψ2(x,t) is a solution also.

- Answer

-

TBD

Let's now introduce another particular solution to the wave equation, which we will need for the general solutions, and that is:

\[ \Psi(x,t) = B(t)\sin(kx). \]

Show that Eq. ??? is indeed a solution of Eq. ??? as long as \(\ddot{B} = -k^2 v^2 B\).

- Answer

-

TBD

We are now ready to present the broad outlines of a solution strategy that takes advantage of the fact that any function of x can be written as a sum over cosines and sines of various wavelengths (an assertion that we will discuss more below). The basic idea is that the amplitudes of these sines and cosines each obey a harmonic oscillator equation, and so their time evolution is simple. The general solution is thus a sum over cosines and sines, each with its own amplitude evolving harmonically at its particular rate.

To be more explicit, here, qualitatively, are the steps:

1) We can write any Ψ(x,t) as a sum over cosines and sines with different wavelengths (and hence different values of k):

\[ \Psi(x,t) = A_1(t)\cos(k_1 x) + B_1(t)\sin(k_1 x) + A_2(t)\cos(k_2 x) + B_2(t)\sin(k_2 x) + \ldots \]

2) If Ψ(x,t) obeys the wave equation then each of the time-dependent amplitudes obeys its own harmonic oscillator equation

\[ \ddot{A}_n(t) = -k_n^2 v^2 A_n(t) \quad \text{and} \quad \ddot{B}_n(t) = -k_n^2 v^2 B_n(t). \]

3) These equations for the amplitudes are easy to solve, and their solutions are completely independent of one another: how A3(t) evolves has no impact on how B2(t) evolves, for example. (Note that this is kind of amazing because they are both waves in the same medium at the same time and location.)

4) With the time evolution of the amplitudes determined (using the given initial conditions), we can just plug those into Eq. ??? to get the solution.

One thing we have not told you yet is how one, in practice, actually writes out the terms on the right-hand side of Eq. ???. For example, how does one know what values of kn are needed? Also, how does one get the needed initial conditions for the An and Bn? We'll get to that, but for now let's look at an example of this solution method at work.

Let's work out how Ψ(x,t) will evolve if it starts off as a triangle wave at rest. Let's assume the triangle wave has a wavelength of 1 Mpc, initially has an amplitude of unity, is initially at rest (˙Ψ(x,0)=0) and is phased so that it is zero at the origin (Ψ(0,0)=0). Let's further assume it obeys the wave equation with speed v; i.e. Eq. ???.

We will state without proof here (but the proof is not difficult; see Wolfram Alpha) that the initial configuration Ψ(x,0) can be written as

\[ \Psi(x,0) = \sum_{n=1}^{\infty}\left(A_n(0)\cos(k_n x) + B_n(0)\sin(k_n x)\right) \]

with \(k_n = 2\pi n/\mathrm{Mpc}\), \(A_n(0) = 0\) for all n, \(B_n(0) = 0\) for even n, and \(B_n(0) = \frac{8}{\pi^2}\frac{(-1)^{(n-1)/2}}{n^2}\) for odd n, and with the time derivatives of all the amplitudes vanishing at t=0. In the figure we show how well the triangle wave is approximated by the series as we increase the number of terms included in the sum (that is, as we increase the maximum value of n), so you can see that this series representation does indeed seem to work. In addition to the sum, we also show the individual terms.
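The convergence described above can also be checked numerically. The following is a minimal sketch, assuming the coefficients B_n(0) quoted above and representing the exact triangle wave as (2/π) arcsin(sin(2πx/λ)), which is one convenient closed form for a unit-amplitude triangle wave that is zero and rising at the origin. The function names are illustrative, not part of the text.

```python
import numpy as np

WAVELENGTH = 1.0  # Mpc, as in the text


def triangle_wave(x):
    """Unit-amplitude triangle wave that is zero and rising at x = 0."""
    return (2 / np.pi) * np.arcsin(np.sin(2 * np.pi * x / WAVELENGTH))


def b_coefficient(n):
    """B_n(0) from the text: zero for even n, +/- 8 / (pi n)^2 for odd n."""
    if n % 2 == 0:
        return 0.0
    return 8 / np.pi**2 * (-1) ** ((n - 1) // 2) / n**2


def partial_sum(x, n_max):
    """Sum of the sine series up to and including n = n_max."""
    total = np.zeros_like(x, dtype=float)
    for n in range(1, n_max + 1):
        k_n = 2 * np.pi * n / WAVELENGTH
        total += b_coefficient(n) * np.sin(k_n * x)
    return total


x = np.linspace(0.0, 1.0, 1001)
for n_max in (1, 5, 25, 99):
    error = np.max(np.abs(partial_sum(x, n_max) - triangle_wave(x)))
    print(f"n_max = {n_max:3d}: max error = {error:.4f}")
```

The maximum error shrinks steadily as more terms are included, with the largest discrepancy sitting at the sharp peaks of the triangle.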

**Lucas, please insert a still (non-animated) figure here showing the series converging**

To be able to explicitly show the solutions, and so this is not too cumbersome, we will restrict ourselves from here on out to just the first three terms in the sum. From the initial conditions written above we thus have

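\[ \Psi(x,t) = \frac{8}{\pi^2}\cos\left(\frac{2\pi}{\mathrm{Mpc}}vt\right)\sin\left(\frac{2\pi}{\mathrm{Mpc}}x\right) - \frac{8}{9\pi^2}\cos\left(\frac{6\pi}{\mathrm{Mpc}}vt\right)\sin\left(\frac{6\pi}{\mathrm{Mpc}}x\right) + \frac{8}{25\pi^2}\cos\left(\frac{10\pi}{\mathrm{Mpc}}vt\right)\sin\left(\frac{10\pi}{\mathrm{Mpc}}x\right) + \ldots \]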

The solution is illustrated in the animation.
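For readers who want to reproduce the evolution themselves, here is a minimal sketch of the three-term solution. It assumes a wave speed of V = 1 Mpc per unit time (the text leaves v unspecified) and simply evaluates the truncated sum at a few times. Because every retained mode has odd n, the whole pattern flips sign after half a period of the n = 1 mode and returns to its starting shape after a full period.

```python
import numpy as np

WAVELENGTH = 1.0  # Mpc
V = 1.0  # assumed wave speed in Mpc per unit time (not specified in the text)

# (n, B_n(0)) for the first three non-zero terms of the triangle-wave series.
MODES = [(1, 8 / np.pi**2), (3, -8 / (9 * np.pi**2)), (5, 8 / (25 * np.pi**2))]


def psi(x, t):
    """Three-term solution: each sine mode oscillates as cos(k_n v t)."""
    total = np.zeros_like(x, dtype=float)
    for n, b_n in MODES:
        k_n = 2 * np.pi * n / WAVELENGTH
        total += b_n * np.cos(k_n * V * t) * np.sin(k_n * x)
    return total


x = np.linspace(0.0, 1.0, 501)
period = WAVELENGTH / V  # oscillation period of the n = 1 mode

initial = psi(x, 0.0)
quarter_period = psi(x, period / 4)  # cos(n pi / 2) = 0 for odd n: flat
half_period = psi(x, period / 2)  # every odd-n mode has flipped sign
full_period = psi(x, period)  # every mode has completed whole cycles

print("flat at a quarter period:", np.allclose(quarter_period, 0.0))
print("inverted at half a period:", np.allclose(half_period, -initial))
print("restored after one period:", np.allclose(full_period, initial))
```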

**Lucas, please insert an animated figure here as described immediately above.**

**Lloyd: insert here some wrap-up of above section **

The Continuous Fourier Transform

We just saw a solution for an initial spatial configuration with wavelength λ=1 Mpc which can be represented as a sum over sines and cosines (just sines in this case) with an (infinite) set of discrete k values, specifically kn=2nπ/λ. Note that the spacing between k values in this case is Δk=2π/λ. For the more general situation of a function of space that is not periodic, we can think of it as a periodic function with infinite wavelength. As the wavelength goes to infinity, Δk goes to zero. So we see we need a continuum of values of k. For the general case, then, we swap the sum over n with an integral over k:

\[ \Psi(x,t) = \int_0^{\infty} dk\left[A(k,t)\cos(kx) + B(k,t)\sin(kx)\right]. \]

It turns out there is a more compact way of working with this decomposition into cosines and sines if we use complex numbers. We can write instead

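\[ \Psi(x,t) = \int_{-\infty}^{\infty} dk\, \tilde{\Psi}(k,t)\, e^{ikx} \]

which is a mathematical operation known as the inverse Fourier transform. For Ψ(x,t) a real function, Eq. ??? and Eq. ??? are equivalent if we make the identification

\[ A(k,t) = 2\,\mathrm{Re}\,\tilde{\Psi}(k,t) \quad \text{and} \quad B(k,t) = -2\,\mathrm{Im}\,\tilde{\Psi}(k,t) \]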

for k>0 where "Re" and "Im" indicate taking the real and imaginary parts respectively. Homework problem TBD is to prove these relationships are true.

Solving the Wave Equation in Fourier Space

You may already be familiar with a method for solving partial differential equations known as separation of variables. Using separation of variables to solve the wave equation, we would guess a solution of the form Ψ(x,t)=X(x)T(t). Plugging this into the wave equation yields two simple ordinary differential equations (ODEs): one for T(t) and one for X(x). Now though, we'd like to introduce you to another way to analyze partial differential equations (PDEs): Fourier methods.

The basic idea here is that we transform from a basis in which the time evolution is complicated (one in which the field is described as a function of position), to a basis in which the time evolution is remarkably simple (one in which the field is described as a collection of Fourier modes). We do the time evolution in this new basis, and then we transform back to our original basis.

We will use the discrete version of the Fourier transform here, as that is perhaps an easier starting point to wrap one's mind around first. We include a discussion of the continuous Fourier transform, which is easy to understand as the continuum limit of the discrete version.

[To be done: all this needs to be translated to discrete from continuous and then we need to create a section on the continuum limit.]

We start off, in a manner that may seem a little backwards, by defining the inverse Fourier transformation:

\[ h(x) = \frac{1}{2\pi}\int_{-\infty}^{\infty} dk\, e^{ikx}\, \tilde{h}(k). \]

The \(\tilde{h}(k)\) are complex (have real and imaginary parts) and recall that exp(ikx) = cos(kx) + i sin(kx). We start here because there is a theorem that states that a broad class of functions of x can all be written as sums over exp(ikx) for a continuum of values of k, and for appropriately chosen complex coefficients of the exp(ikx). That is, we can represent the information in a function h(x) by its Fourier coefficients \(\tilde{h}(k)\), with the relationship between the two given by Equation ???. The functions exp(ikx) are known as Fourier modes. Since exp(ikx)=exp(ik(x+2π/k)) we see that a Fourier mode has a wavelength of 2π/k. We call k the 'wavenumber.'

One can do Fourier transforms in time or in space or both. Here we are only going to be doing Fourier transforms in space, although we will consider Fourier transforms in space at all points in time. To be explicit about this, we can rewrite Equation ??? to include a t argument of the functions:

\[ h(x,t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} dk\, e^{ikx}\, \tilde{h}(k,t). \]

It's the same transformation, but now we are explicit that we do this transformation at all values of t.

Recall that we claimed that the evolution of the ˜h(k,t) would be simple. To figure out what equation governs the evolution of these coefficients, we need to know how to figure out for a given h(x,t) what is ˜h(k,t). But we are going to return to leaving off the t dependence, for simplicity. We already know how to go from ˜h(k) to h(x), that is what we called the inverse Fourier transform, Equation ???. So we are looking now for the inverse of this, what we will naturally call the Fourier transform.

Let's work our way toward the Fourier transform by first pointing out an important property of Fourier modes: they are orthogonal, and normalized up to a factor of 2π. This means that if we integrate over all space one Fourier mode, \(e^{ik'x}\), multiplied by the complex conjugate of another Fourier mode, \(e^{-ikx}\), the result is 2π times the Dirac delta function:

\[ \int_{-\infty}^{\infty} dx\, e^{-ikx} e^{ik'x} = 2\pi\,\delta(k-k') \]

where the Dirac delta function is a continuum version of the Kronecker delta function, defined by its integral over k such that

\[ \int_{-\infty}^{\infty} dk\, \delta(k-k')\, f(k) = f(k'). \]

You can loosely think of the Dirac delta function as being zero for all non-zero values of its argument and +∞ when its argument is zero.
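A discrete analogue makes the orthogonality property easy to check on a computer. In the sketch below we assume a periodic box of length L = 2π sampled at N points (both illustrative choices), so that the allowed wavenumbers are integers. In such a finite box the overlap integral equals L when k = k′ and zero otherwise; the Dirac delta function emerges only in the limit of an infinitely long box, where the spacing between allowed k values goes to zero.

```python
import numpy as np

N = 64  # number of grid points (an arbitrary illustrative choice)
L = 2 * np.pi  # box length, so the allowed wavenumbers are integers
x = np.arange(N) * L / N
dx = L / N


def overlap(k, k_prime):
    """Approximate the integral of exp(-i k x) exp(i k' x) over the box."""
    return np.sum(np.exp(-1j * k * x) * np.exp(1j * k_prime * x)) * dx


same = overlap(3, 3)  # equals the box length L
different = overlap(3, 5)  # vanishes to rounding error

print("k = k':", same)
print("k != k':", different)
```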

From these equations one can derive what we call the Fourier transform:

\[ \tilde{h}(k) = \int_{-\infty}^{\infty} dx\, e^{-ikx}\, h(x) \]

and thus the answer to the question of how we deduce \(\tilde{h}(k,t)\) from h(x,t).
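To see the transform pair in action, here is a minimal numerical sketch using a Gaussian, h(x) = exp(−x²/2), chosen because its transform under the convention above is known in closed form: √(2π) exp(−k²/2). The integrals are approximated by simple Riemann sums on grids whose extent and spacing are illustrative assumptions.

```python
import numpy as np

# Grid wide and fine enough that the Gaussian is negligible at the edges.
x = np.linspace(-20.0, 20.0, 4001)
dx = x[1] - x[0]
h = np.exp(-x**2 / 2)


def fourier_transform(k):
    """h~(k) = integral dx exp(-i k x) h(x), approximated by a Riemann sum."""
    return np.sum(np.exp(-1j * k * x) * h) * dx


k_values = np.linspace(-8.0, 8.0, 1601)
dk = k_values[1] - k_values[0]
h_tilde = np.array([fourier_transform(k) for k in k_values])

# Known analytic result for this convention: sqrt(2 pi) exp(-k^2 / 2).
h_tilde_exact = np.sqrt(2 * np.pi) * np.exp(-k_values**2 / 2)


def inverse_transform(x0):
    """h(x0) = (1 / 2 pi) integral dk exp(i k x0) h~(k), by a Riemann sum."""
    return np.sum(np.exp(1j * k_values * x0) * h_tilde).real * dk / (2 * np.pi)


print("max error in h~(k):", np.max(np.abs(h_tilde - h_tilde_exact)))
print("h(1) recovered:", inverse_transform(1.0), "exact:", np.exp(-0.5))
```

Transforming and then inverse transforming returns the original function, which is the content of the statement that the two operations are inverses of one another.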

Box 1.28.6

Exercise 27.1.1: Show that one can derive Equation ??? from Equations ???, ???, and ???.

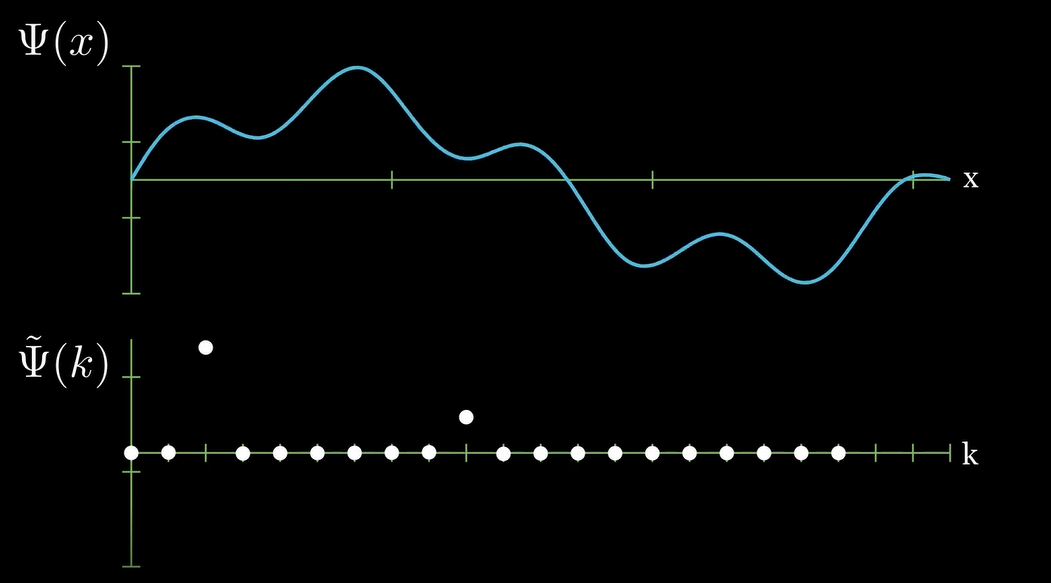

Before deriving the evolution equation for the Fourier coefficients, let's look at an example of a function in the position basis and what it looks like in the Fourier basis. The following image shows a wave on the top panel, Ψ(x), and the Fourier transform of that wave on the bottom panel. (Note that F(Ψ) indicates the operation of Fourier transforming the function Ψ(x); i.e., \(F(\Psi) = \tilde{\Psi}(k)\).) Notice how the Fourier transform 'picks out' the two spatial frequencies of which the wave is composed.
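A similar picture can be produced with a discrete Fourier transform. The sketch below is a hypothetical example, not the wave shown in the image: it builds a wave from two sine components with wavenumbers k = 3 and k = 10 on a periodic box of length 2π, and uses NumPy's FFT to find where the transform is largest. Note that NumPy's FFT uses its own normalization convention, so only the locations of the peaks, not their heights, should be compared with the continuous transform.

```python
import numpy as np

N = 1024
L = 2 * np.pi  # box length, chosen so the wavenumbers below fit exactly
x = np.arange(N) * L / N

# Hypothetical example wave with two spatial frequencies, k = 3 and k = 10.
psi = 1.0 * np.sin(3 * x) + 0.5 * np.sin(10 * x)

# Discrete Fourier transform of a real signal (non-negative k only).
psi_tilde = np.fft.rfft(psi)
k = 2 * np.pi * np.fft.rfftfreq(N, d=L / N)  # angular wavenumbers

# The two largest values of |psi_tilde| sit at the two input wavenumbers.
peak_indices = np.argsort(np.abs(psi_tilde))[-2:]
peak_wavenumbers = np.sort(k[peak_indices])

print("peaks found at k =", peak_wavenumbers)
```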

[Problem: this is a discrete FT and we have only talked about continuum.]

For a Ψ(x,t) that obeys the wave equation, let's now find the equation that its Fourier coefficients, ˜Ψ(k,t), satisfy. Starting from the wave equation,

\[ \frac{\partial^2 \Psi(x,t)}{\partial t^2} = v^2 \frac{\partial^2 \Psi(x,t)}{\partial x^2}, \]

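and then substituting in the inverse Fourier transform \(\Psi(x,t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} dk\, \tilde{\Psi}(k,t)\, e^{ikx}\) we find, after cancelling the common factor of 1/(2π):

\[ \frac{\partial^2}{\partial t^2}\int_{-\infty}^{\infty} dk\, \tilde{\Psi}(k,t)\, e^{ikx} = v^2 \frac{\partial^2}{\partial x^2}\int_{-\infty}^{\infty} dk\, \tilde{\Psi}(k,t)\, e^{ikx}. \]

Distributing the derivatives gives:

\[ \int_{-\infty}^{\infty} dk\, \frac{\partial^2 \tilde{\Psi}(k,t)}{\partial t^2}\, e^{ikx} = -\int_{-\infty}^{\infty} dk\, (kv)^2\, \tilde{\Psi}(k,t)\, e^{ikx}. \]

We can then rearrange terms to find:

\[ \int_{-\infty}^{\infty} dk \left[\frac{\partial^2 \tilde{\Psi}(k,t)}{\partial t^2} + (kv)^2\, \tilde{\Psi}(k,t)\right] e^{ikx} = 0. \]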

It turns out that the only way the left-hand side can be zero for all values of x is if the quantity in square brackets is zero for all values of k (see Box below) so we get that

\[ \frac{\partial^2 \tilde{\Psi}(k,t)}{\partial t^2} + (kv)^2\, \tilde{\Psi}(k,t) = 0. \]

Box 1.28.7

Exercise 27.2.1: Prove that if

\[ \int_{-\infty}^{\infty} dk\, f(k)\, e^{ikx} = 0 \]

for all x, then f(k)=0 for all k.

First, multiply the left-hand side of Equation ??? by exp(−ik′x), integrate it over all x, and identify the Dirac delta function to end up with:

\[ \frac{\partial^2 \tilde{\Psi}(k',t)}{\partial t^2} + (k'v)^2\, \tilde{\Psi}(k',t) = 0. \]

Finally, note that since this is true for all k′ it's also true for all k.

Equation ??? is a very common differential equation. You've probably solved it many times! You may recognize it better if we let \(y = \tilde{\Psi}(k,t)\), so that it reads \(\ddot{y} + k^2 v^2 y = 0\). We can easily write down a solution:

\[ \tilde{\Psi}(k,t) = A(k)\sin(kvt) + B(k)\cos(kvt). \]

Thus our general solution back in the space basis is

\[ \Psi(x,t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} dk \left[A(k)\sin(kvt) + B(k)\cos(kvt)\right] e^{ikx}. \]

We can find A(k) and B(k) if we know Ψ(x,t) and ˙Ψ(x,t) at t=0 because

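\[ \Psi(x,t=0) = \frac{1}{2\pi}\int_{-\infty}^{\infty} dk\, B(k)\, e^{ikx} \]

and

\[ \dot{\Psi}(x,t=0) = \frac{1}{2\pi}\int_{-\infty}^{\infty} dk\, kv\, A(k)\, e^{ikx}. \]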

Given these relationships we see that to get B(k) and A(k) we Fourier transform the initial value of Ψ and its time derivative:

\[ B(k) = \int_{-\infty}^{\infty} dx\, \Psi(x,t=0)\, e^{-ikx} \]

and

\[ A(k) = \frac{1}{kv}\int_{-\infty}^{\infty} dx\, \dot{\Psi}(x,t=0)\, e^{-ikx}. \]

To summarize, we found that in a Fourier basis, rather than the original space basis, the wave equation simplifies from a partial differential equation to a set of uncoupled ordinary differential equations. The wave equation is easily solved in the Fourier basis and we provided the general solution. This general solution depends on two functions of k that can be derived from the initial conditions.
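The whole procedure (transform the initial data, evolve each mode as a harmonic oscillator, transform back) fits in a few lines of code. The following is a sketch under stated assumptions rather than a definitive implementation: it uses NumPy's discrete FFT on a periodic box in place of the continuous transform, and the box length, grid size, wave speed v = 1 and Gaussian initial pulse are all illustrative choices. As a check, it compares the result with d'Alembert's formula, a standard result not derived in this chapter, which says that a pulse f(x) released from rest splits into two half-height copies, ½[f(x − vt) + f(x + vt)].

```python
import numpy as np

N = 512
L = 40.0  # periodic box length (illustrative)
v = 1.0  # assumed wave speed
x = np.arange(N) * L / N - L / 2
k = 2 * np.pi * np.fft.fftfreq(N, d=L / N)


def evolve(psi0, psi_dot0, t):
    """Evolve initial data to time t, treating each Fourier mode as an oscillator."""
    b = np.fft.fft(psi0)  # plays the role of B(k)
    c = np.fft.fft(psi_dot0)  # plays the role of k v A(k)
    # sin(k v t) / (k v), written with np.sinc so k = 0 needs no special case.
    sin_over_kv = t * np.sinc(k * v * t / np.pi)
    psi_tilde_t = b * np.cos(k * v * t) + c * sin_over_kv
    return np.fft.ifft(psi_tilde_t).real


def pulse(y):
    """Gaussian pulse used as illustrative initial data."""
    return np.exp(-y**2)


t = 5.0
psi_t = evolve(pulse(x), np.zeros(N), t)

# d'Alembert's formula for a pulse released from rest.
expected = 0.5 * (pulse(x - v * t) + pulse(x + v * t))
print("max difference from d'Alembert:", np.max(np.abs(psi_t - expected)))
```

Writing sin(kvt)/(kv) as t·sinc(kvt/π) sidesteps the division by zero at k = 0 that appears in the formula for A(k); in that limit the mode simply grows linearly in time.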

Consider the following initial conditions on our string: Ψ(x,t=0)=sin(2x), with the string initially at rest. This is a single wave with k = 2. Taking the Fourier transform (and dropping constant factors, as well as the mirror-image spike at k = −2 that accompanies any real function), we find F(Ψ(x,t=0)) ∝ δ(k−2). The Fourier transform is a spike at k = 2 and zero elsewhere. We see that over time, the amplitude of this wave oscillates as cos(2vt). The solution to the wave equation for these initial conditions is therefore Ψ(x,t)=sin(2x)cos(2vt). This wave and its Fourier transform are shown below. The power spectrum is merely the squared magnitude of the Fourier transform.

Now consider we have initial conditions which are more complicated, but can be written as an infinite sum of sine waves as follows:

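\[ \Psi(x,t=0) = \sum_{i=1}^{\infty} A_i \sin(k_i x) \]

Taking the Fourier transform, we find the following sum of delta functions:

\[ F(\Psi(x,t=0)) = \sum_{i=1}^{\infty} A_i\, \delta(k-k_i) \]

Which oscillate in time according to:

\[ F(\Psi(x,t)) = \sum_{i=1}^{\infty} A_i\, \delta(k-k_i)\cos(k_i v t) \]

Returning to real space we find:

\[ \Psi(x,t) = \sum_{i=1}^{\infty} A_i \sin(k_i x)\cos(k_i v t) \]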

The takeaway here is that the solution to the wave equation can always be written as a sum of independent standing waves. Some examples are shown below. The top panel shows the wave and the bottom panel shows the Fourier transform of that wave. Notice how the evolution seems very complex in real space, but in Fourier space it is merely independent delta functions of oscillating amplitude. This is the beauty of using Fourier methods to analyze the wave equation. If you wanted to see the power spectrum, you would simply square the Fourier transform.

Exercise 1.28.8

Consider the heat equation for a straight rod: \(\frac{\partial \Psi}{\partial t} = \alpha \frac{\partial^2 \Psi}{\partial x^2}\), where Ψ(x,t) is the temperature at position x along the rod at time t. Using the techniques from the previous section, find the evolution of Fourier modes. How can this physically be interpreted?

- Answer

-

We plug in the Fourier representation of Ψ into the heat equation:

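\[ \frac{\partial}{\partial t}\int_{-\infty}^{\infty} F(\Psi)\, e^{ikx}\, dk = \alpha \frac{\partial^2}{\partial x^2}\int_{-\infty}^{\infty} F(\Psi)\, e^{ikx}\, dk \]

Distributing the derivatives and some algebra gives:

\[ \int_{-\infty}^{\infty}\left[\frac{\partial F(\Psi)}{\partial t}\, e^{ikx} + \alpha k^2\, e^{ikx}\, F(\Psi)\right] dk = 0 \]

Which is satisfied if:

\[ \frac{\partial F(\Psi)}{\partial t} + \alpha k^2 F(\Psi) = 0 \]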

Using separation of variables, we find:

\(F(\Psi) = C e^{-\alpha k^2 t}\), where C is a constant in time (it may differ from one k to another) determined by initial conditions.

Therefore, we see that modes with higher spatial frequency (larger k) decay faster. This makes sense, as we would expect spikes in temperature (high curvature) to disappear quickly, whereas smoother temperature gradients will decay more slowly.
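This mode-by-mode decay can be illustrated numerically. The sketch below assumes a periodic rod of length 2π, a diffusivity α = 0.1 in arbitrary units, and a hypothetical initial temperature profile made of one smooth mode (k = 1) and one wiggly mode (k = 8); none of these choices come from the exercise itself. It uses NumPy's discrete FFT in place of the continuous transform.

```python
import numpy as np

N = 256
L = 2 * np.pi  # length of the (periodic) rod, illustrative
alpha = 0.1  # assumed diffusivity, arbitrary units
x = np.arange(N) * L / N
k = 2 * np.pi * np.fft.fftfreq(N, d=L / N)


def evolve_heat(psi0, t):
    """Damp each Fourier mode by exp(-alpha k^2 t), then transform back."""
    psi_tilde0 = np.fft.fft(psi0)
    return np.fft.ifft(psi_tilde0 * np.exp(-alpha * k**2 * t)).real


# Hypothetical initial profile: a smooth k = 1 mode plus a wiggly k = 8 mode.
psi0 = np.sin(x) + np.sin(8 * x)
t = 1.0
psi_t = evolve_heat(psi0, t)

# Each mode should have decayed by its own factor exp(-alpha k^2 t).
expected = np.exp(-alpha * t) * np.sin(x) + np.exp(-64 * alpha * t) * np.sin(8 * x)

print("k = 1 mode retains:", np.exp(-alpha * t))
print("k = 8 mode retains:", np.exp(-64 * alpha * t))
print("max difference from expected profile:", np.max(np.abs(psi_t - expected)))
```

After one time unit the k = 8 ripple has all but vanished while the k = 1 mode has barely changed, which is the behaviour described in the answer above.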