In many areas of engineering, signals are well-modeled as sinusoids. Also, devices that process these signals are often well-modeled as linear time-invariant (LTI) systems. The response of an LTI system to any linear combination of sinusoids is another linear combination of sinusoids having the same frequencies. In other words, (1) sinusoidal signals processed by LTI systems remain sinusoids and are not somehow transformed into square waves or some other waveform; and (2) we may calculate the response of the system for one sinusoid at a time, and then add the results to find the response of the system when multiple sinusoids are applied simultaneously. Property (2) is known as superposition.

The analysis of systems that process sinusoidal waveforms is greatly simplified when the sinusoids are represented as phasors. Here is the key idea:

Definition: phasor

A phasor is a complex-valued number that represents a real-valued sinusoidal waveform. Specifically, a phasor has the magnitude and phase of the sinusoid it represents.

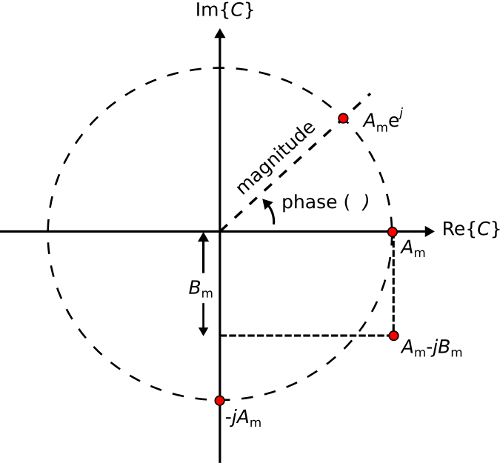

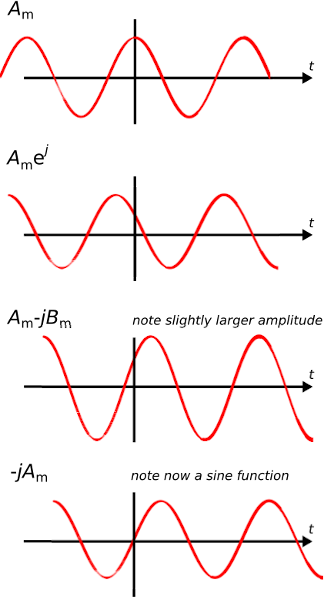

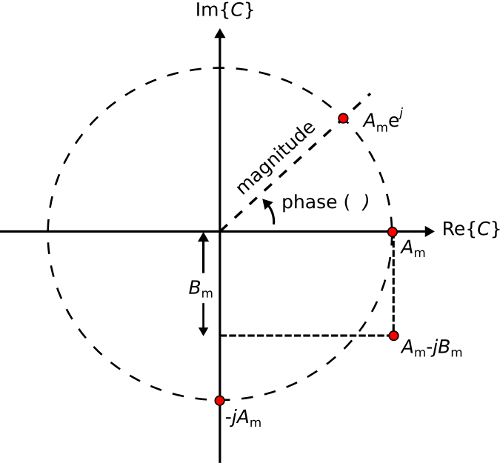

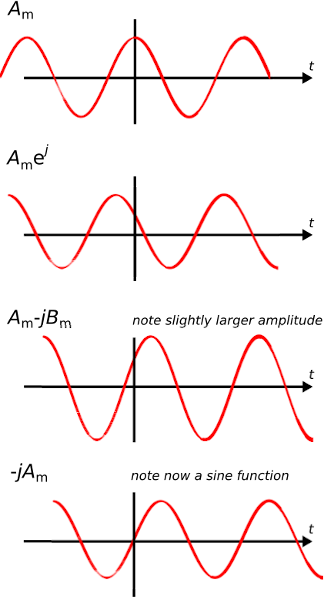

Figures \(\PageIndex{1}\) and \(\PageIndex{2}\) show some examples of phasors and the associated sinusoids. It is important to note that a phasor by itself is not the signal. A phasor is merely a simplified mathematical representation in which the actual, real-valued physical signal is represented as a complex-valued constant.

Figure \(\PageIndex{1}\): Examples of phasors, displayed here as points in the real-imaginary plane.

Equation \ref{1.7} is a completely general form for a physical (hence, real-valued) quantity varying sinusoidally with angular frequency \(\omega = 2\pi f\), where \(f\) is frequency:

\[A ( t ; \omega ) = A _ { m } ( \omega ) \cos ( \omega t + \psi ( \omega ) ) \label{1.7} \]

where \(A_m(\omega)\) is the magnitude at the specified frequency, \(\psi(\omega)\) is the phase at the specified frequency, and \(t\) is time. We also require \(\partial A_m/\partial t = 0\); that is, the time variation of \(A(t)\) must be completely represented by the cosine function alone. Now we can equivalently express \(A(t; \omega)\) as a phasor \(C(\omega)\):

\[C ( \omega ) = A _ { m } ( \omega ) e ^ { j \psi ( \omega ) } \label{1.8} \]

To convert this phasor back to the physical signal it represents, we (1) restore the time dependence by multiplying by \(e^{jωt}\), and then (2) take the real part of the result. In mathematical notation:

\[\boxed{A ( t ; \omega ) = \operatorname { Re } \left\{ C ( \omega ) e ^ { j \omega t } \right\}} \label{1.9} \]

To see why this works, simply substitute the right-hand side of Equation \ref{1.8} into Equation \ref{1.9}. Then

\[\begin{align*} A ( t ) & = \operatorname { Re } \left\{ A _ { m } ( \omega ) e ^ { j \psi ( \omega ) } e ^ { j \omega t } \right\} \\[4pt] & = \operatorname { Re } \left\{ A _ { m } ( \omega ) e ^ { j ( \omega t + \psi ( \omega ) ) } \right\} \\[4pt] & = \operatorname { Re } \left\{ A _ { m } ( \omega ) [ \cos ( \omega t + \psi ( \omega ) ) + j \sin ( \omega t + \psi ( \omega ) )] \right\} \\[4pt] & = A _ { m } ( \omega ) \cos ( \omega t + \psi ( \omega ) ) \end{align*} \nonumber \]

as expected.
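The round trip between Equations \ref{1.7}, \ref{1.8}, and \ref{1.9} can also be checked numerically. Below is a minimal Python sketch using only the standard library; the helper names `to_phasor` and `to_time` and the sample values of \(A_m\), \(\psi\), and \(f\) are illustrative assumptions, not part of the original text.

```python
import cmath
import math

def to_phasor(a_m: float, psi: float) -> complex:
    """Equation 1.8: phasor with magnitude a_m and phase psi (radians)."""
    return a_m * cmath.exp(1j * psi)

def to_time(c: complex, omega: float, t: float) -> float:
    """Equation 1.9: restore exp(j omega t), then take the real part."""
    return (c * cmath.exp(1j * omega * t)).real

# Illustrative values (assumptions): amplitude 3, phase 30 degrees, 50 Hz.
a_m, psi, f = 3.0, math.radians(30.0), 50.0
omega = 2 * math.pi * f
c = to_phasor(a_m, psi)

# Compare Equation 1.9 against the direct cosine form of Equation 1.7.
for t in (0.0, 1e-3, 7.5e-3):
    direct = a_m * math.cos(omega * t + psi)
    via_phasor = to_time(c, omega, t)
    print(f"t={t:.4f} s  direct={direct:+.6f}  phasor={via_phasor:+.6f}")
```

The two columns agree to within floating-point rounding at every instant, which is the numerical counterpart of the derivation above.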

Figure \(\PageIndex{2}\): Sinusoids corresponding to the phasors shown in Figure \(\PageIndex{1}\)

It is common to write Equation \ref{1.8} as follows, dropping the explicit indication of frequency dependence:

\[C = A _ { m } e ^ { j \psi } \nonumber \]

This does not normally cause any confusion, since the definition of a phasor requires that the values of \(C\) and \(\psi\) be those that apply at whatever frequency is represented by the suppressed sinusoidal dependence \(e^{j\omega t}\).

Table \(\PageIndex{1}\) shows mathematical representations of the same phasors demonstrated in Figure \(\PageIndex{1}\) (and their associated sinusoidal waveforms in Figure \(\PageIndex{2}\)). It is a good exercise to confirm each row in the table, transforming from left to right and vice versa.

Table \(\PageIndex{1}\): Some examples of physical (real-valued) sinusoidal signals and the corresponding phasors. \(A_m\) and \(B_m\) are real-valued and constant with respect to \(t\).

| \(A(t)\) | \(C\) |
|---|---|
| \(A_m \cos(\omega t)\) | \(A_m\) |
| \(A_m \cos(\omega t + \psi)\) | \(A_m e^{j\psi}\) |
| \(A_m \sin(\omega t) = A_m \cos(\omega t - \frac{\pi}{2})\) | \(-jA_m\) |
| \(A_m \cos(\omega t) + B_m \sin(\omega t) = A_m \cos(\omega t) + B_m \cos(\omega t - \frac{\pi}{2})\) | \(A_m - jB_m\) |
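One way to spot-check the table numerically is to evaluate both sides of Equation \ref{1.9} on a dense grid of times covering one period. The Python sketch below does this for each row. The constants \(A_m\), \(B_m\), \(\psi\) and the 1 kHz frequency are arbitrary illustrative choices, and a check at sample points is a sanity check rather than a proof.

```python
import cmath
import math

# Illustrative values (assumptions) for the constants in the table.
a_m, b_m, psi = 2.0, 0.5, 0.7
omega = 2 * math.pi * 1e3  # 1 kHz, chosen arbitrarily

def from_phasor(c: complex, t: float) -> float:
    """Equation 1.9: A(t) = Re{C exp(j omega t)}."""
    return (c * cmath.exp(1j * omega * t)).real

# Each row: (time-domain signal as a function of t, claimed phasor C).
rows = [
    (lambda t: a_m * math.cos(omega * t), a_m),
    (lambda t: a_m * math.cos(omega * t + psi), a_m * cmath.exp(1j * psi)),
    (lambda t: a_m * math.sin(omega * t), -1j * a_m),
    (lambda t: a_m * math.cos(omega * t) + b_m * math.sin(omega * t), a_m - 1j * b_m),
]

# Sample one period and record the worst mismatch for each row.
period = 2 * math.pi / omega
times = [k * period / 200 for k in range(200)]
for i, (signal, c) in enumerate(rows, start=1):
    err = max(abs(signal(t) - from_phasor(c, t)) for t in times)
    print(f"row {i}: max |A(t) - Re{{C e^(jwt)}}| = {err:.2e}")
```

Each reported mismatch is at the level of floating-point rounding, consistent with every row of the table.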

It is not necessary to use a phasor to represent a sinusoidal signal. We choose to do so because phasor representation leads to dramatic simplifications. For example:

- Calculation of the peak value from data representing \(A ( t ; \omega )\) requires a time-domain search over one period of the sinusoid. However, if you know \(C\), the peak value of \(A(t)\) is simply \(|C|\), and no search is required.

- Calculation of \(ψ\) from data representing \(A(t; ω)\) requires correlation (essentially, integration) over one period of the sinusoid. However, if you know \(C\), then \(ψ\) is simply the phase of \(C\), and no integration is required.
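To make the comparison concrete, the sketch below starts from samples of one period, finds the peak by brute-force search, and estimates the phasor by correlating the samples against \(e^{-j\omega t}\) (a discrete stand-in for the integration mentioned above). Once \(C\) is known, the peak and phase are read off directly. The signal parameters, the 64-sample grid, and the helper name `estimate_phasor` are assumptions for illustration.

```python
import cmath
import math

# Illustrative sinusoid (assumed values): amplitude 1.5, phase -40 degrees, 60 Hz.
a_m, psi, f = 1.5, math.radians(-40.0), 60.0
omega = 2 * math.pi * f
n = 64  # samples per period (assumption); uniform sampling over exactly one period
period = 1.0 / f
t = [k * period / n for k in range(n)]
x = [a_m * math.cos(omega * tk + psi) for tk in t]

# Time-domain approach: search the samples for the peak.
# The result is only as accurate as the sampling grid allows.
peak_search = max(x)

def estimate_phasor(samples, times, omega):
    """Correlate one period of samples with exp(-j omega t).

    For uniform sampling over exactly one period this returns A_m e^{j psi}.
    """
    acc = sum(s * cmath.exp(-1j * omega * tk) for s, tk in zip(samples, times))
    return 2 * acc / len(samples)

c = estimate_phasor(x, t, omega)

# With C in hand, peak and phase require no further search or integration.
print(f"peak by search : {peak_search:.6f}")
print(f"peak as |C|    : {abs(c):.6f}")
print(f"phase of C     : {math.degrees(cmath.phase(c)):.3f} deg")
```

Note that the brute-force search slightly underestimates the peak because no sample lands exactly on the crest, whereas \(|C|\) recovers it exactly.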

Furthermore, mathematical operations applied to \(A(t; ω)\) can be equivalently performed as operations on \(C\), and the latter are typically much easier than the former. To demonstrate this, we first make two important claims and show that they are true.

Example \(\PageIndex{1}\): Claim 1

Let \(C_1\) and \(C_2\) be two complex-valued constants (independent of \(t\)). Suppose also that \(\operatorname { Re } \left\{ C _ { 1 } e ^ { j \omega t } \right\} = \operatorname { Re } \left\{ C _ { 2 } e ^ { j \omega t } \right\}\) for all \(t\). Then \(C_1 = C_2\).

Proof

Evaluating at \(t = 0\), we find \( \operatorname{Re}\left\{C_{1}\right\}=\operatorname{Re}\left\{C_{2}\right\} \). Since \(C_1\) and \(C_2\) are constant with respect to time, this must be true for all \(t\). At \(t = \pi/(2\omega)\), where \(e^{j\omega t} = e^{j\pi/2} = j\), we find

\[\operatorname { Re } \left\{ C _ { 1 } e ^ { j \omega t } \right\} = \operatorname { Re } \left\{ C _ { 1 } \cdot j \right\} = - \operatorname { Im } \left\{ C _ { 1 } \right\} \nonumber \]

and similarly

\[\operatorname { Re } \left\{ C _ { 2 } e ^ { j \omega t } \right\} = \operatorname { Re } \left\{ C _ { 2 } \cdot j \right\} = - \operatorname { Im } \left\{ C _ { 2 } \right\} \nonumber \]

Therefore, \( \operatorname{Im}\left\{C_{1}\right\}=\operatorname{Im}\left\{C_{2}\right\} \). Once again, since \(C_1\) and \(C_2\) are constant with respect to time, this must be true for all \(t\). Since the real and imaginary parts of \(C_1\) and \(C_2\) are equal, \(C_1 = C_2\).

What does this mean?

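We have just shown that if two sinusoidal waveforms having the same frequency are equal for all \(t\), then the phasors that represent them are also equal. In other words, a phasor uniquely identifies the sinusoid it represents.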
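The proof of Claim 1 is constructive: the real part of the phasor is the waveform's value at \(t = 0\), and the imaginary part is the negative of its value at \(t = \pi/(2\omega)\). A minimal sketch of that idea follows, assuming the waveform is known exactly to be a sinusoid at the stated \(\omega\). The function name `phasor_from_two_samples` and the test values are hypothetical.

```python
import cmath
import math

def phasor_from_two_samples(waveform, omega: float) -> complex:
    """Recover C from A(t) = Re{C exp(j omega t)}, following the proof of Claim 1.

    At t = 0:             A(0)            = Re{C}
    At t = pi/(2 omega):  A(pi/(2 omega)) = Re{jC} = -Im{C}
    """
    re_c = waveform(0.0)
    im_c = -waveform(math.pi / (2 * omega))
    return complex(re_c, im_c)

# Hypothetical test waveform built from a known phasor.
omega = 2 * math.pi * 10.0
c_true = 0.8 - 1.3j
waveform = lambda t: (c_true * cmath.exp(1j * omega * t)).real

c_recovered = phasor_from_two_samples(waveform, omega)
print(c_true, c_recovered)
```

With real, noisy measurements, two samples would not be a robust estimator; the correlation approach over a full period is the usual choice there.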

Example \(\PageIndex{2}\): Claim 2

For any real-valued linear operator \(\mathcal { T }\) and complex-valued quantity \(C\),

\[\mathcal { T } ( \operatorname { Re } \{ C \} ) = \operatorname { Re } \{ \mathcal { T } ( C ) \} \label{Ex1.1}. \]

Proof

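Let \(C = c _ { r } + j c _ { i }\), where \(c_r\) and \(c_i\) are real-valued quantities, and evaluate the right side of Equation \ref{Ex1.1}. Because \(\mathcal{T}\) is linear, \(\mathcal{T}(c_r + jc_i) = \mathcal{T}(c_r) + j\mathcal{T}(c_i)\); because \(\mathcal{T}\) is real-valued, \(\mathcal{T}(c_r)\) and \(\mathcal{T}(c_i)\) are both real, so taking the real part leaves \(\mathcal{T}(c_r)\):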

\[\begin{aligned} \operatorname { Re } \{ \mathcal { T } ( C ) \} & = \operatorname { Re } \left\{ \mathcal { T } \left( c _ { r } + j c _ { i } \right) \right\} \\[4pt] & = \operatorname { Re } \left\{ \mathcal { T } \left( c _ { r } \right) + j \mathcal { T } \left( c _ { i } \right) \right\} \\[4pt] & = \mathcal { T } \left( c _ { r } \right) \\[4pt] & = \mathcal { T } ( \operatorname { Re } \{ C \} ) \end{aligned} \nonumber \]

What does this mean?

The operators that we have in mind for \(\mathcal { T }\) include addition, multiplication by a real-valued constant, differentiation, integration, and so on. Here’s an example with differentiation:

\[\begin{aligned} \operatorname { Re } \left\{ \frac { \partial } { \partial \omega } C \right\} & = \operatorname { Re } \left\{ \frac { \partial } { \partial \omega } \left( c _ { r } + j c _ { i } \right) \right\} = \frac { \partial } { \partial \omega } c _ { r } \\[4pt] \frac { \partial } { \partial \omega } \operatorname { Re } \{ C \} & = \frac { \partial } { \partial \omega } \operatorname { Re } \left\{ \left( c _ { r } + j c _ { i } \right) \right\} = \frac { \partial } { \partial \omega } c _ { r } \end{aligned} \nonumber \]

In other words, differentiation of a sinusoidal signal can be accomplished by differentiating the associated phasor, so there is no need to transform a phasor back into its associated real-valued signal in order to perform this operation.
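For time differentiation specifically, applying Claim 2 with \(\mathcal{T} = \partial/\partial t\) to Equation \ref{1.9} gives \(\partial A/\partial t = \operatorname{Re}\{ j\omega C e^{j\omega t}\}\), so the phasor of the derivative is simply \(j\omega C\). The Python sketch below checks this against a central finite difference computed in the time domain. The phasor value, frequency, and step size are illustrative assumptions.

```python
import cmath
import math

omega = 2 * math.pi * 5.0       # assumed frequency (5 Hz)
c = 2.0 * cmath.exp(1j * 0.3)   # assumed phasor

def a(t: float) -> float:
    """Physical signal A(t) = Re{C exp(j omega t)} (Equation 1.9)."""
    return (c * cmath.exp(1j * omega * t)).real

def da_dt_finite_difference(t: float, h: float = 1e-6) -> float:
    """Time-domain derivative estimated with a central difference."""
    return (a(t + h) - a(t - h)) / (2 * h)

def da_dt_phasor(t: float) -> float:
    """Same derivative via the phasor: the phasor of dA/dt is j*omega*C."""
    return (1j * omega * c * cmath.exp(1j * omega * t)).real

for t in (0.0, 0.013, 0.05):
    fd, ph = da_dt_finite_difference(t), da_dt_phasor(t)
    print(f"t={t:.3f}  finite diff={fd:+.5f}  phasor={ph:+.5f}")
```

The phasor route replaces a limit (or a numerical approximation of one) with a single complex multiplication.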

Summary

Claims 1 and 2 together entitle us to perform operations on phasors as surrogates for the physical, real-valued, sinusoidal waveforms they represent. Once we are done, we can transform the resulting phasor back into the physical waveform it represents using Equation \ref{1.9}, if desired.

However, a final transformation back to the time domain is usually unnecessary, since the phasor tells us everything we can know about the corresponding sinusoid.

A skeptical student might question the value of phasor analysis on the grounds that signals of practical interest are sometimes not sinusoidally varying, so phasor analysis seems not to apply generally. It is certainly true that many signals of practical interest are not sinusoidal, and many are far from it. Nevertheless, phasor analysis is broadly applicable, for two basic reasons:

- Many signals, although not strictly sinusoidal, are “narrowband” and therefore well-modeled as sinusoidal. For example, a cellular telecommunications signal might have a bandwidth on the order of 10 MHz and a center frequency of about 2 GHz. This means the difference in frequency between the band edges of this signal is just 0.5% of the center frequency. The frequency response associated with signal propagation or with hardware can often be assumed to be constant over this range of frequencies. With some caveats, doing phasor analysis at the center frequency and assuming the results apply equally well over the bandwidth of interest is often a pretty good approximation.

- It turns out that phasor analysis is easily extended to any physical signal, regardless of bandwidth. This is because any physical signal can be decomposed into a linear combination of sinusoids; this is known as Fourier analysis. This linear combination of sinusoids is found by computing the Fourier series if the signal is periodic, or the Fourier transform otherwise. Phasor analysis applies to each frequency independently, and (invoking superposition) the results can be added together to obtain the result for the complete signal. Combining the per-frequency results of phasor analysis is nothing more than integration over frequency, i.e.:

\[\int _ { - \infty } ^ { + \infty } A ( t ; \omega ) d \omega \nonumber \]

Using Equation \ref{1.9}, this can be rewritten:

\[\int _ { - \infty } ^ { + \infty } \operatorname { Re } \left\{ C ( \omega ) e ^ { j \omega t } \right\} d \omega \nonumber \]

We can go one step further using Claim 2:

\[\operatorname { Re } \left\{ \int _ { - \infty } ^ { + \infty } C ( \omega ) e ^ { j \omega t } d \omega \right\} \nonumber \]

The quantity in the curly braces is, apart from a constant factor that depends on the convention used (\(2\pi\) in the most common one), the inverse Fourier transform of \(C(\omega)\). Thus, we can analyze a signal of arbitrarily large bandwidth simply by keeping \(\omega\) as an independent variable while doing phasor analysis; if we ever need the physical signal, we just take the real part of the inverse Fourier transform of the phasor. So not only is it possible to analyze any time-domain signal using phasor analysis, it is also often far easier than doing the same analysis on the time-domain signal directly.
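For a periodic signal, the Fourier-series route can be sketched directly. Below, a \(\pm 1\) square wave is split into its odd harmonics, each harmonic's phasor is multiplied by the frequency response of a hypothetical first-order low-pass system \(H(\omega) = 1/(1 + j\omega\tau)\), and superposition reassembles the output using Equation \ref{1.9}. The system, the time constant \(\tau\), the period, and the number of harmonics are all assumptions chosen for illustration; truncating the series makes the result an approximation.

```python
import cmath
import math

period = 1e-3                   # assumed 1 ms period
omega0 = 2 * math.pi / period   # fundamental angular frequency
num_harmonics = 100             # number of odd harmonics kept (assumption)

def square_wave_phasors():
    """Yield (omega_k, C_k) for the odd-harmonic Fourier series of a +/-1 square wave.

    x(t) = sum over odd k of (4 / (pi k)) sin(k omega0 t); per Table 1, the
    phasor of B sin(omega t) is -jB.
    """
    for m in range(num_harmonics):
        k = 2 * m + 1
        yield k * omega0, -1j * 4 / (math.pi * k)

def lowpass(omega: float, tau: float) -> complex:
    """Hypothetical first-order low-pass frequency response H = 1/(1 + j omega tau)."""
    return 1 / (1 + 1j * omega * tau)

def output(t: float, tau: float) -> float:
    """Phasor analysis at each harmonic, then superposition via Equation 1.9."""
    return sum((lowpass(w, tau) * c * cmath.exp(1j * w * t)).real
               for w, c in square_wave_phasors())

# tau = 0 passes every harmonic unchanged, recovering the (truncated) square wave.
print(f"tau = 0:     y(T/4) = {output(period / 4, 0.0):.4f}")
# A tau much longer than the period strongly attenuates every harmonic.
print(f"tau = 10 T:  y(T/4) = {output(period / 4, 10 * period):.4f}")
```

Each harmonic is handled by one complex multiplication; no differential equation is solved in the time domain.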

Summary

Phasor analysis does not limit us to sinusoidal waveforms: it applies not only to sinusoids and to sufficiently narrowband signals, but also to signals of arbitrary bandwidth via Fourier analysis.
