2.3: Maxwell’s Demon, information, and computing

Before proceeding to other statistical distributions, I would like to make a detour to address one more popular concern about Equation (\(2.2.5 - 2.2.6\)) – the direct relation between entropy and information. Some physicists are still uneasy with entropy being nothing other than the (deficit of) information, though to the best of my knowledge, nobody has yet been able to suggest any experimentally verifiable difference between these two notions. Let me give one example of their direct relation. Consider a cylinder containing just one molecule (considered as a point particle), and separated into two halves by a movable partition with a door that may be opened and closed at will, at no energy cost – see Figure \(\PageIndex{1a}\). If the door is open and the system is in thermodynamic equilibrium, we do not know on which side of the partition the molecule is. Here the disorder, i.e. the entropy, has its largest value, and there is no way to get any useful mechanical energy from a large ensemble of such systems in equilibrium.

Now, let us assume that we know (as instructed by, in Lord Kelvin’s formulation, an omniscient Maxwell’s Demon) on which side of the partition the molecule is currently located. Then we may close the door, trapping the molecule, so that its repeated impacts on the partition create, on average, a pressure force \(\pmb{\mathscr{F}}\) directed toward the empty part of the volume (in Figure \(\PageIndex{1b}\), the right one). Now we can get some mechanical work from the molecule, say by allowing the force \(\pmb{\mathscr{F}}\) to move the partition to the right, and picking up the resulting mechanical energy by some deterministic (zero-entropy) external mechanism. After the partition has been moved to the right end of the volume, we can open the door again (Figure \(\PageIndex{1c}\)), equalizing the molecule’s average pressure on both sides of the partition, and then slowly move the partition back to the middle of the volume – without its resistance, i.e. without doing any substantial work. With the continuing help of Maxwell’s Demon, we can repeat the cycle again and again, and hence make the system perform unlimited mechanical work, fed “only” by the molecule’s thermal motion and the information about its position – thus implementing the perpetual motion machine of the \(2^{nd}\) kind – see Sec. 1.6. The fact that such heat engines do not exist means that getting any new information at non-zero temperature (i.e. at a substantial thermal agitation of particles) has a non-zero energy cost.

In order to evaluate this cost, let us calculate the maximum work per cycle that can be performed by the Szilard engine (Figure \(\PageIndex{1}\)), assuming that it is constantly in thermal equilibrium with a heat bath of temperature \(T\). Formula (\(2.2.2\)) tells us that the information supplied by the demon (on exactly which half of the volume contains the molecule) is exactly one bit, \(I (2) = 1\). According to Equation (\(2.2.5 - 2.2.6\)), this means that by getting this information we are changing the entropy of our system by

\[ \Delta S_I = - \ln 2 . \label{50}\]

Now, it would be a mistake to plug this (negative) entropy change into Equation (\(1.3.6\)). First, that relation is only valid for slow, reversible processes. Moreover (and more importantly), this equation, as well as its irreversible version (\(1.4.18\)), is only valid for a fixed statistical ensemble. The change \(\Delta S_I\) does not belong to this category and may be formally described by the change of the statistical ensemble – from the one consisting of all similar systems (experiments) with an unknown location of the molecule, to a new ensemble consisting of the systems with the molecule in a certain (in Figure \(\PageIndex{1}\), the left) half.
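The maximum work per cycle announced above can be sketched in one line. This is a minimal estimate that assumes the single molecule, averaged over many impacts, obeys the ideal-gas law \(PV' = T\) (with \(T\) in energy units, \(T \equiv k_B T_K\)) and that the partition moves slowly enough for the expansion from \(V/2\) to \(V\) to stay isothermal; the integration variable \(V'\) is introduced here only for illustration:

\[ \Delta W_{max} = \int_{V/2}^{V} P \, dV' = T \int_{V/2}^{V} \frac{dV'}{V'} = T \ln 2 . \]

Since the energy of an ideal gas does not change at constant temperature, this work is fed entirely by the heat \(\Delta Q = T \ln 2\) taken from the bath, so the molecule's entropy grows by \(\Delta Q / T = \ln 2\) during the expansion, exactly offsetting the change \(\Delta S_I = -\ln 2\) associated with the demon's one bit.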

Actually, discussion of another issue closely related to Maxwell’s Demon, namely of energy consumption in numerical calculations, was started earlier, in the 1960s. It was motivated by the exponential (Moore’s-law) progress of digital integrated circuits, which has led, in particular, to a fast reduction of the energy \(\Delta E\) “spent” (turned into heat) per binary logic operation. In the recent generations of semiconductor digital integrated circuits, the typical \(\Delta E\) is still above \(10^{-17}\) J, i.e. still exceeds the room-temperature value of \(T\ln 2 \approx 3\times 10^{-21}\) J by several orders of magnitude. Still, some engineers believe that thermodynamics imposes this important lower limit on \(\Delta E\) and hence presents an insurmountable obstacle to the future progress of computation. Unfortunately, in the 2000s this delusion resulted in a substantial and unjustified shift of electron device research resources toward using “non-charge degrees of freedom” such as spin (as if they did not obey the general laws of statistical physics!), so that the issue deserves at least a brief discussion.

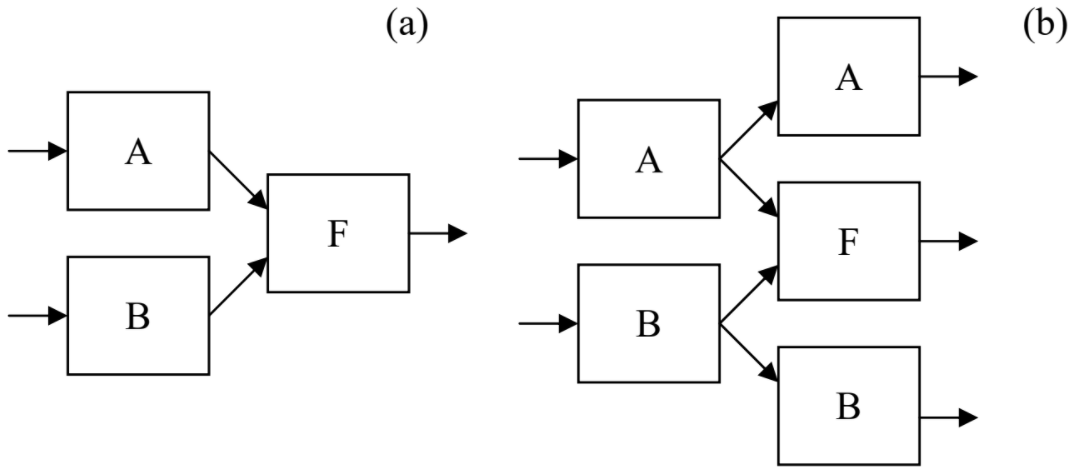

Let me hope that the reader of these notes understands that, in contrast to naïve popular talk, computers do not create any new information; all they can do is reshape (“process”) the input information, losing most of it along the way. Indeed, any digital computation algorithm may be decomposed into simple, binary logical operations, each of them performed by a circuit called the logic gate. Some of these gates (e.g., the logical NOT performed by inverters, as well as memory READ and WRITE operations) do not change the amount of information in the computer. On the other hand, such information-irreversible logic gates as two-input NAND (or NOR, or XOR, etc.) erase one bit at each operation, because they turn two input bits into one output bit – see Figure \(\PageIndex{2a}\).
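The distinction between the two kinds of gates can be checked by enumerating truth tables. The following is only an illustrative sketch; the helper names (`is_logically_reversible`, `preimages`, `logic_not`, `logic_nand`) are hypothetical and not part of the original notes. A gate is called logically reversible here if distinct inputs always produce distinct outputs, so that the input can be recovered from the output.

```python
from itertools import product


def logic_not(a):
    """One-input inverter: 0 -> 1, 1 -> 0."""
    return 1 - a


def logic_nand(a, b):
    """Two-input NAND: output is 0 only when both inputs are 1."""
    return 1 - (a & b)


def preimages(gate, n_inputs):
    """Map each output value to the list of input tuples that produce it."""
    table = {}
    for bits in product((0, 1), repeat=n_inputs):
        table.setdefault(gate(*bits), []).append(bits)
    return table


def is_logically_reversible(gate, n_inputs):
    """True if every output value comes from exactly one input tuple."""
    return all(len(inputs) == 1 for inputs in preimages(gate, n_inputs).values())


if __name__ == "__main__":
    print("NOT reversible: ", is_logically_reversible(logic_not, 1))
    print("NAND reversible:", is_logically_reversible(logic_nand, 2))
    print("NAND preimages: ", preimages(logic_nand, 2))
```

For NAND, three different input pairs collapse onto the output 1, so the inputs cannot be reconstructed from the output; for NOT, the mapping is one-to-one.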

In 1961, Rolf Landauer argued that each logically irreversible operation should turn into heat at least the energy

Irreversible computation: energy cost

\[\boxed{ \Delta E_{min} = T \ln 2 \equiv k_B T_K \ln 2. } \label{51}\]

This result may be illustrated with the Szilard engine (Figure \(\PageIndex{1}\)), operated in a reversed cycle. At the first stage, with the door closed, it uses external mechanical work \(\Delta E = T\ln 2\) to reduce the volume in which the molecule is confined, from \(V\) to \(V/2\), pumping heat \(\Delta Q = \Delta E\) into the heat bath. To model a logically irreversible logic gate, let us now open the door in the partition, and thus lose one bit of information about the molecule’s position. Then we will never get the work \(T\ln 2\) back, because moving the partition back to the right, with the door open, takes place at zero average pressure. Hence, Equation (\ref{51}) gives a fundamental limit for energy loss (per bit) in logically irreversible computation.
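To put Equation (\ref{51}) in numbers, here is a short sketch. It assumes a room temperature of 300 K (a value chosen here for illustration) and uses the exact SI value of the Boltzmann constant; the function name `landauer_limit_joules` is hypothetical. The gate energy of \(10^{-17}\) J is the figure quoted in the text above.

```python
import math

# Boltzmann constant in J/K (exact by the 2019 SI definition).
K_B = 1.380649e-23


def landauer_limit_joules(temperature_kelvin):
    """Minimum heat per erased bit, k_B * T_K * ln 2, in joules."""
    return K_B * temperature_kelvin * math.log(2)


# Assumption: "room temperature" is taken as 300 K.
room_limit = landauer_limit_joules(300.0)

# Typical energy per logic operation quoted in the text.
typical_gate_energy = 1e-17

ratio = typical_gate_energy / room_limit

if __name__ == "__main__":
    print(f"Landauer limit at 300 K: {room_limit:.3e} J")
    print(f"Typical gate energy exceeds it by a factor of about {ratio:.0f}")
```

With these assumptions the limit comes out slightly below \(3\times 10^{-21}\) J, and the quoted gate energy exceeds it by a factor of a few thousand.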

Before we leave Maxwell’s Demon behind, let me use it to revisit, one more time, the relation between the reversibility of the classical and quantum mechanics of Hamiltonian systems and the irreversibility possible in thermodynamics and statistical physics. In the gedanken experiment shown in Figure \(\PageIndex{1}\), the laws of mechanics governing the motion of the molecule are reversible at all times. Still, during the partition’s motion to the right, driven by molecular impacts, the entropy grows, because the molecule picks up the heat \(\Delta Q > 0\), and hence the entropy \(\Delta S = \Delta Q/T > 0\), from the heat bath. The physical mechanism of this irreversible entropy (read: disorder) growth is the interaction of the molecule with uncontrollable components of the heat bath, and the resulting loss of information about the motion of the molecule. Philosophically, such emergence of irreversibility in large systems is a strong argument against reductionism – a naïve belief that knowing the exact laws of Nature at the lowest, most fundamental level of its complexity, we can readily understand all phenomena on the higher levels of its organization. In reality, the macroscopic irreversibility of large systems is a good example of a new law (in this case, the \(2^{nd}\) law of thermodynamics) that becomes relevant on a substantially new, higher level of complexity – without defying the lower-level laws. Without such new laws, very little of the higher-level organization of Nature may be understood.