2.4: Canonical ensemble and the Gibbs distribution


As was shown in Sec. 2 (see also a few problems from the list given at the end of this chapter), the microcanonical distribution may be used directly for solving some simple problems. However, its further development, also due to J. Gibbs, turns out to be much more convenient for calculations.

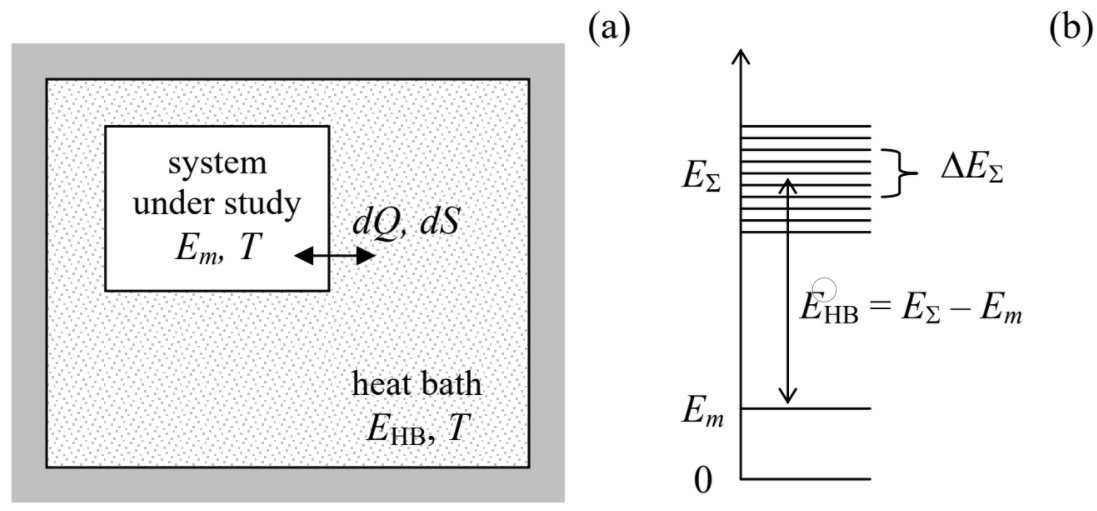

Let us consider a statistical ensemble of macroscopically similar systems, each in thermal equilibrium with a heat bath of the same temperature T (Figure 2.4.1a). Such an ensemble is called canonical.

It is intuitively evident that if the heat bath is sufficiently large, any thermodynamic variables characterizing the system under study should not depend on the heat bath's environment. In particular, we may assume that the heat bath is thermally insulated, so that the total energy \(E_\Sigma\) of the composite system, consisting of the system of our interest plus the heat bath, does not change in time. For example, if the system of our interest is in a certain (say, \(m\)-th) quantum state, then the sum

\[ E_\Sigma = E_m + E_{\mathrm{HB}} \]

is time-independent. Now let us partition the considered canonical ensemble of such systems into much smaller sub-ensembles, each being a microcanonical ensemble of composite systems whose total, time-independent energies \(E_\Sigma\) are the same (as was discussed in Sec. 2, within a certain small energy interval \(\Delta E_\Sigma \ll E_\Sigma\)); see Figure 2.4.1b. Because each heat bath is very large in comparison with the system under study, the heat bath's density of states \(g_{\mathrm{HB}}\) is very high, and \(\Delta E_\Sigma\) may be selected so that

\[ \frac{1}{g_{\mathrm{HB}}} \ll \Delta E_\Sigma \ll |E_m - E_{m'}| \ll E_{\mathrm{HB}}, \]

where m and m′ are any states of the system of our interest.

According to the microcanonical distribution, within each of these microcanonical sub-ensembles the probabilities to find the composite system in any of its states are equal. Still, the heat bath energies \(E_{\mathrm{HB}} = E_\Sigma - E_m\) (Figure 2.4.1b) of the members of this sub-ensemble may differ, because of the difference in \(E_m\). The probability \(W(E_m)\) to find the system of our interest (within the selected sub-ensemble) in a state with energy \(E_m\) is proportional to the number \(\Delta M\) of the corresponding heat baths in the sub-ensemble. As Figure 2.4.1b shows, in this case we may write \(\Delta M = g_{\mathrm{HB}}(E_{\mathrm{HB}})\,\Delta E_\Sigma\). As a result, within the microcanonical sub-ensemble with the total energy \(E_\Sigma\),

\[ W_m \propto \Delta M = g_{\mathrm{HB}}(E_{\mathrm{HB}})\,\Delta E_\Sigma = g_{\mathrm{HB}}(E_\Sigma - E_m)\,\Delta E_\Sigma. \]

Let us simplify this expression further, using the Taylor expansion with respect to the relatively small \(E_m \ll E_\Sigma\). However, here we should be careful. As we have seen in Sec. 2, the density of states of a large system is an extremely fast-growing function of energy, so that if we applied the Taylor expansion directly to this expression, a few leading terms of the series would be accurate only for very small \(E_m\). A much broader range of applicability may be obtained by first taking the logarithms of both sides:

\[ \ln W_m = \text{const} + \ln\left[g_{\mathrm{HB}}(E_\Sigma - E_m)\right] + \ln \Delta E_\Sigma = \text{const} + S_{\mathrm{HB}}(E_\Sigma - E_m), \]

where the last equality results from the application of Equation (2.2.18) to the heat bath, and \(\ln \Delta E_\Sigma\) has been incorporated into the (inconsequential) constant. Now we can Taylor-expand the (much smoother) function of energy on the right-hand side, and limit ourselves to the two leading terms of the series:

\[ \ln W_m \approx \text{const} + S_{\mathrm{HB}}\Big|_{E_m=0} - \frac{dS_{\mathrm{HB}}}{dE_{\mathrm{HB}}}\Big|_{E_m=0} E_m. \]

But according to Equation (1.2.6), the derivative in this expression is nothing other than the reciprocal temperature of the heat bath, which (due to the large bath size) does not depend on whether \(E_m\) is equal to zero or not. Since our system of interest is in thermal equilibrium with the bath, this is also the temperature \(T\) of the system; see Equation (1.2.5). Hence the two-term expansion is merely

\[ \ln W_m = \text{const} - \frac{E_m}{T}. \]

This equality describes a substantial decrease of \(W_m\) as \(E_m\) is increased by \(\sim T\). Hence only states with \(E_m\) up to a few \(T\) matter, and for them our linear approximation of \(S_{\mathrm{HB}}\) is virtually exact as soon as \(E_{\mathrm{HB}}\) is much larger than \(T\). This condition is rather easy to satisfy because, as we have seen in Sec. 2, the average energy per degree of freedom of the heat bath is also of the order of \(T\), so that its total energy is much larger because of its much larger size.
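To see concretely why the logarithm is taken before expanding, and why the linearized result becomes so accurate for a large bath, here is a small Python sketch. It assumes, purely for illustration, a hypothetical heat bath of \(N\) harmonic oscillators sharing \(q\) energy quanta (an "Einstein solid"), whose number of states \(\binom{q+N-1}{q}\) stands in for \(g_{\mathrm{HB}}\); one quantum is the energy unit, and \(T\) is measured in the same units. None of these names come from the text above.

```python
import math


def ln_g(q: int, n_osc: int) -> float:
    """ln of the number of states of a model bath: q quanta shared by n_osc oscillators."""
    return math.lgamma(q + n_osc) - math.lgamma(q + 1) - math.lgamma(n_osc)


def inverse_temperature(q_total: int, n_osc: int) -> float:
    """1/T = dS_HB/dE_HB, estimated by a central difference of ln g (energy unit = one quantum)."""
    return (ln_g(q_total + 1, n_osc) - ln_g(q_total - 1, n_osc)) / 2


# Part 1: linearize g_HB itself versus linearize ln g_HB = S_HB, for one bath and one E_m
N = Q = 10_000          # hypothetical bath size and total energy E_Sigma (in quanta)
E_m = 5                 # energy taken by the system of interest
beta = inverse_temperature(Q, N)
exact = math.exp(ln_g(Q - E_m, N) - ln_g(Q, N))   # g_HB(E_Sigma - E_m) / g_HB(E_Sigma)
direct = 1 - beta * E_m                            # first-order Taylor expansion of g_HB
via_log = math.exp(-beta * E_m)                    # first-order expansion of S_HB, exponentiated
print(f"exact {exact:.5f}, direct {direct:.5f}, via log {via_log:.5f}")


# Part 2: the error of ln W_m = const - E_m/T shrinks as the bath grows
def max_log_error(n_osc: int, e_max: int = 10) -> float:
    q_total = n_osc     # fixed energy per oscillator, so T stays roughly the same
    b = inverse_temperature(q_total, n_osc)
    return max(abs((ln_g(q_total - e, n_osc) - ln_g(q_total, n_osc)) + b * e)
               for e in range(e_max + 1))


for n in (100, 1_000, 10_000, 100_000):
    print(f"N = {n:>7}: max |error in ln W_m| = {max_log_error(n):.1e}")
```

With these assumed numbers, the direct linearization of \(g_{\mathrm{HB}}\) even predicts a negative probability, while the logarithmic one agrees with the exact ratio to better than 0.1%, and the worst-case error of \(\ln W_m\) over \(E_m \le 10\) falls roughly as \(1/N\).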

Now we should be careful again, because so far the relation \(\ln W_m = \text{const} - E_m/T\) was derived only for a sub-ensemble with a certain fixed \(E_\Sigma\). However, since the second term on its right-hand side includes only \(E_m\) and \(T\), which are independent of \(E_\Sigma\), this relation, perhaps with different constant terms, is valid for all sub-ensembles of the canonical ensemble, and hence for that ensemble as a whole. Hence for the total probability to find our system of interest in a state with energy \(E_m\), in the canonical ensemble with temperature \(T\), we can write

Gibbs distribution:

\[ W_m = \text{const} \times \exp\left\{-\frac{E_m}{T}\right\} \equiv \frac{1}{Z}\exp\left\{-\frac{E_m}{T}\right\}. \]

This is the famous Gibbs distribution,³⁶ sometimes called the "canonical distribution", which is arguably the summit of statistical physics,³⁷ because it may be used for a straightforward (or at least conceptually straightforward :-) calculation of all statistical and thermodynamic variables of a vast range of systems.
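As a first, hedged illustration, the Python sketch below turns a list of state energies into Gibbs probabilities by normalizing the Boltzmann factors \(\exp\{-E_m/T\}\); the normalizing sum is exactly the coefficient \(Z\) calculated next. The spectrum, a hypothetical ladder \(E_n = n\) truncated at 60 states, and the helper name `gibbs_probabilities` are illustrative assumptions; \(T\) is in the same energy units.

```python
import math


def gibbs_probabilities(energies, T):
    """Return W_m = exp(-E_m/T) / Z for a list of state energies (one entry per quantum state)."""
    e0 = min(energies)  # a common shift of all E_m cancels between numerator and Z
    weights = [math.exp(-(e - e0) / T) for e in energies]
    z_shifted = sum(weights)
    return [w / z_shifted for w in weights]


T = 1.0
levels = [float(n) for n in range(60)]  # hypothetical ladder E_n = n, truncated at 60 states
W = gibbs_probabilities(levels, T)
print(sum(W))                           # normalization
print(W[1] / W[0], math.exp(-1 / T))    # Boltzmann ratio of adjacent levels
# For the infinite ladder the ground-state probability is 1 - exp(-1/T);
# the truncated sum reproduces it because higher states are negligibly occupied.
print(W[0], 1 - math.exp(-1 / T))
```

Subtracting the lowest energy before exponentiating is only a numerical precaution against overflow or underflow when \(|E_m|/T\) is large; it does not change any \(W_m\).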

Before illustrating the power of this distribution, let us first calculate the coefficient \(Z\) in it for the general case. Requiring, per Equation (2.1.4), the sum of all \(W_m\) to be equal to 1, we get

Statistical sum:

\[ Z = \sum_m \exp\left\{-\frac{E_m}{T}\right\}, \]

where the summation is formally extended to all quantum states of the system, though in practical calculations the sum may be truncated to include only the states that are noticeably occupied. The apparently humble normalization coefficient \(Z\) turns out to be so important for applications that it has a special name, or actually two names: either the statistical sum or the partition function of the system. To appreciate the importance of \(Z\), let us use the general expression (2.2.11) for entropy to calculate the entropy for the particular case of the canonical ensemble, i.e. for the Gibbs distribution of the probabilities \(W_m\):

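\[ S = -\sum_m W_m \ln W_m = \frac{\ln Z}{Z}\sum_m \exp\left\{-\frac{E_m}{T}\right\} + \frac{1}{ZT}\sum_m E_m \exp\left\{-\frac{E_m}{T}\right\}. \]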

On the other hand, according to the general rule (2.1.7), the thermodynamic (i.e. ensemble-averaged) value E of the internal energy of the system is

\[ E = \sum_m W_m E_m = \frac{1}{Z}\sum_m E_m \exp\left\{-\frac{E_m}{T}\right\}, \]

so that the second term on the right-hand side of the entropy expression above is just \(E/T\), while the first term equals \(\ln Z\), because the sum in it is just \(Z\). (By the way, using the notion of the reciprocal temperature \(\beta \equiv 1/T\), and taking into account the definition of \(Z\), the expression for \(E\) may also be rewritten as

E from Z:

\[ E = -\frac{\partial(\ln Z)}{\partial \beta}. \]

This formula is very convenient for calculations if our prime interest is the average internal energy \(E\) rather than \(F\) or \(W_m\).) With these substitutions, the entropy expression yields a very simple relation between the statistical sum and the entropy of the system:

\[ S = \frac{E}{T} + \ln Z. \]
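The two results just derived, \(S = E/T + \ln Z\) and \(E = -\partial(\ln Z)/\partial\beta\), are easy to check numerically. The Python sketch below does so for a small, entirely hypothetical five-state spectrum (a doubly degenerate level is listed as two separate states, since the sums run over states rather than levels), with \(T\) in energy units and a central finite difference in \(\beta\); the helper names are illustrative only.

```python
import math


def thermo_from_levels(energies, T):
    """Return (Z, E, S) for the Gibbs distribution at temperature T (T in energy units)."""
    boltz = [math.exp(-e / T) for e in energies]
    Z = sum(boltz)
    W = [b / Z for b in boltz]
    E = sum(w * e for w, e in zip(W, energies))
    S = -sum(w * math.log(w) for w in W if w > 0)  # S = -sum_m W_m ln W_m
    return Z, E, S


levels = [0.0, 0.3, 0.3, 1.1, 2.5]  # hypothetical spectrum, one entry per state
T = 0.7
Z, E, S = thermo_from_levels(levels, T)
print(S, E / T + math.log(Z))       # S = E/T + ln Z


def ln_Z(beta):
    """ln Z as a function of the reciprocal temperature beta = 1/T."""
    return math.log(sum(math.exp(-beta * e) for e in levels))


beta, h = 1 / T, 1e-5
E_from_Z = -(ln_Z(beta + h) - ln_Z(beta - h)) / (2 * h)  # E = -d(ln Z)/d(beta)
print(E, E_from_Z)
```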

Now using Equation (1.4.10), we see that the relation \(S = E/T + \ln Z\) gives a straightforward way to calculate the free energy \(F\) of the system from nothing other than its statistical sum (and temperature):

F from Z:

\[ F \equiv E - TS = -T \ln Z. \]
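A practical way to evaluate \(F = -T\ln Z\) in code, sketched below in Python for a small hypothetical spectrum, factors out the lowest energy \(E_0\), i.e. uses \(F = E_0 - T\ln\sum_m \exp\{-(E_m - E_0)/T\}\), so that the exponentials cannot all underflow when \(E_m \gg T\). The sketch then checks \(F = E - TS\), and also \(S = -(\partial F/\partial T)_V\), which follows from the thermodynamic relation \(dF = -S\,dT - P\,dV\). Function names are illustrative assumptions.

```python
import math


def free_energy(energies, T):
    """F = -T ln Z, with the lowest energy factored out (a log-sum-exp shift)."""
    e0 = min(energies)
    return e0 - T * math.log(sum(math.exp(-(e - e0) / T) for e in energies))


def energy_and_entropy(energies, T):
    """Average energy E and entropy S = -sum W ln W of the Gibbs distribution."""
    e0 = min(energies)
    boltz = [math.exp(-(e - e0) / T) for e in energies]
    z_shifted = sum(boltz)
    W = [b / z_shifted for b in boltz]
    E = sum(w * e for w, e in zip(W, energies))
    S = -sum(w * math.log(w) for w in W if w > 0)
    return E, S


levels = [0.0, 0.3, 0.3, 1.1, 2.5]  # hypothetical spectrum, one entry per state
T = 0.7
F = free_energy(levels, T)
E, S = energy_and_entropy(levels, T)
print(F, E - T * S)                 # F = E - TS

h = 1e-5
S_from_F = -(free_energy(levels, T + h) - free_energy(levels, T - h)) / (2 * h)
print(S, S_from_F)                  # S = -dF/dT

# Shifting all levels far above T: a naive sum of exp(-E_m/T) would underflow to 0 here
F_shifted = free_energy([e + 1000.0 for e in levels], T)
print(F_shifted - F)
```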

The relations \(E = -\partial(\ln Z)/\partial\beta\) and \(F = -T\ln Z\) play the key role in the connection of statistics to thermodynamics, because they enable the calculation, from \(Z\) alone, of the thermodynamic potentials of the system in equilibrium, and hence of all other variables of interest, using the general thermodynamic relations (see especially the circular diagram shown in Figure 1.4.2, and its discussion in Sec. 1.4). Let me only note that to calculate the pressure \(P\), e.g., from the second of Eqs. (1.4.12), we would need to know the explicit dependence of \(F\), and hence of the statistical sum \(Z\), on the system's volume \(V\). This would require the calculation, by appropriate methods of either classical or quantum mechanics, of the dependence of the eigenenergies \(E_m\) on the volume. Numerous examples of such calculations will be given later in the course.
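To make the remark about pressure concrete, here is a one-dimensional Python sketch. It assumes a single particle in a box of length \(L\) with the standard spectrum \(E_n = n^2\pi^2\hbar^2/(2mL^2)\), written in hypothetical units where \(\pi^2\hbar^2/(2m) = 1\), and uses the force on the wall, \(f = -(\partial F/\partial L)_T\), as the 1D analog of \(P = -(\partial F/\partial V)_T\). Because every \(E_n\) scales as \(L^{-2}\), differentiating \(F = -T\ln Z\) gives \(\partial F/\partial L = \sum_n W_n\,\partial E_n/\partial L = -2E/L\), so \(f = 2E/L\) serves as a check. The temperature and the cutoff `n_max` are illustrative choices.

```python
import math


def levels_in_box(L, n_max=200):
    """Hypothetical 1D box spectrum E_n = n^2 / L^2 (units with pi^2 hbar^2 / 2m = 1)."""
    return [n * n / (L * L) for n in range(1, n_max + 1)]


def free_energy(energies, T):
    """F = -T ln Z, with the lowest energy factored out for numerical safety."""
    e0 = min(energies)
    return e0 - T * math.log(sum(math.exp(-(e - e0) / T) for e in energies))


def mean_energy(energies, T):
    """Gibbs-averaged energy E = sum_m W_m E_m."""
    e0 = min(energies)
    boltz = [math.exp(-(e - e0) / T) for e in energies]
    return sum(b * e for b, e in zip(boltz, energies)) / sum(boltz)


T, L, h = 50.0, 1.0, 1e-6  # n_max = 200 is far above the occupied states at this T
# Force on the wall from the L-dependence of F (central finite difference)
f = -(free_energy(levels_in_box(L + h), T) - free_energy(levels_in_box(L - h), T)) / (2 * h)
E = mean_energy(levels_in_box(L), T)
print(f, 2 * E / L)
```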

Before proceeding to the first such examples, let us notice that the definition of \(Z\) and the relation \(F = -T\ln Z\) may be readily combined to give an elegant equality,

\[ \exp\left\{-\frac{F}{T}\right\} = \sum_m \exp\left\{-\frac{E_m}{T}\right\}. \]

This equality enables us to rewrite the Gibbs distribution \(W_m = Z^{-1}\exp\{-E_m/T\}\) in another form:

\[ W_m = \exp\left\{\frac{F - E_m}{T}\right\}, \]

more convenient for some applications. In particular, this expression shows that since all probabilities \(W_m\) are below 1, \(F\) is always lower than the lowest energy level. It also clearly shows that the probabilities \(W_m\) do not depend on the energy reference, i.e. on an arbitrary constant added to all \(E_m\), and hence to \(E\) and \(F\): such a constant cancels in the difference \(F - E_m\).
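The alternative form \(W_m = \exp\{(F - E_m)/T\}\) and its two consequences just noted can be verified with a short Python sketch. The five-state spectrum, the shift constant `C`, and the helper names are hypothetical choices for illustration, with \(T\) in energy units.

```python
import math


def free_energy(energies, T):
    """F = -T ln Z, with the lowest energy factored out for numerical safety."""
    e0 = min(energies)
    return e0 - T * math.log(sum(math.exp(-(e - e0) / T) for e in energies))


def probabilities_via_F(energies, T):
    """W_m = exp{(F - E_m)/T}, the Gibbs distribution written through the free energy."""
    F = free_energy(energies, T)
    return [math.exp((F - e) / T) for e in energies]


levels = [0.0, 0.3, 0.3, 1.1, 2.5]  # hypothetical spectrum, one entry per state
T = 0.7
W = probabilities_via_F(levels, T)
print(sum(W))                        # normalized without computing Z separately

# Adding a constant C to every E_m shifts F by the same C, so every W_m is unchanged
C = 42.0
W_shifted = probabilities_via_F([e + C for e in levels], T)
print(max(abs(a - b) for a, b in zip(W, W_shifted)))

# F lies below the lowest level, because every W_m is below 1
print(free_energy(levels, T) < min(levels))
```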