1.13: Fitting a Least Squares Polynomial to a Set of Observational Points


I shall start by assuming that the values of x are known to a high degree of precision and that all the errors are in the values of y. In other words, I shall calculate a least squares polynomial regression of y upon x. In fact I shall show how to calculate a least squares quadratic regression of y upon x, a quadratic polynomial representing, of course, a parabola. What we want to do is to calculate the coefficients \(a_0\), \(a_1\), \(a_2\) such that the sum of the squares of the residuals is least, the residual of the \(i\)th point being

\[
R_i = y_i - \left(a_0 + a_1 x_i + a_2 x_i^2\right).
\]
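As a sketch of the standard least squares step, call the sum of the squares of the residuals \(S\) (a symbol introduced here only for this illustration). Setting the partial derivative of \(S\) with respect to each coefficient to zero gives three linear “normal” Equations for \(a_0\), \(a_1\) and \(a_2\):

\[
S = \sum_{i=1}^{N} R_i^2 = \sum_{i=1}^{N}\left[y_i - \left(a_0 + a_1 x_i + a_2 x_i^2\right)\right]^2,
\qquad
\frac{\partial S}{\partial a_k} = -2\sum_{i=1}^{N} x_i^{k} R_i = 0 \quad (k = 0, 1, 2),
\]

\[
\begin{pmatrix}
N & \sum x_i & \sum x_i^2 \\
\sum x_i & \sum x_i^2 & \sum x_i^3 \\
\sum x_i^2 & \sum x_i^3 & \sum x_i^4
\end{pmatrix}
\begin{pmatrix} a_0 \\ a_1 \\ a_2 \end{pmatrix}
=
\begin{pmatrix} \sum y_i \\ \sum x_i y_i \\ \sum x_i^2 y_i \end{pmatrix}.
\]

For a polynomial of degree \(n\) the pattern continues: counting rows and columns from 0, the matrix entry in row \(j\), column \(k\) is \(\sum x_i^{j+k}\), and the right-hand side of row \(j\) is \(\sum x_i^{j} y_i\).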

Setting every residual to zero gives N simultaneous linear Equations, \(a_0 + a_1 x_i + a_2 x_i^2 = y_i\), for the three unknowns \(a_0\), \(a_1\) and \(a_2\); with more than three points they cannot in general all be satisfied exactly. You already know how to find the least squares solution for these, and indeed, after having read Section 1.8, you already have a program for solving the Equations. (Remember that here the unknowns are \(a_0\), \(a_1\) and \(a_2\), not \(x\)! You just have to adjust your notation a bit.) Thus there is no difficulty in finding the least squares quadratic regression of y upon x, and indeed the extension to polynomials of higher degree will now be obvious.
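For readers without the program of Section 1.8 to hand, here is a minimal sketch in Python. The names `solve_linear` and `polyfit_ls` are hypothetical, not from the text. The sketch forms the normal Equations for a polynomial of any degree and solves them by Gaussian elimination with partial pivoting. One departure from the plain method is an assumption of this sketch: x is first centred and scaled, because with x values of several hundred the sums of high powers of x make the normal Equations badly conditioned; the coefficients are then converted back to ordinary powers of x.

```python
from math import comb


def solve_linear(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        # Bring the row with the largest entry in this column up to the pivot position.
        p = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[p] = m[p], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in reversed(range(n)):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def polyfit_ls(xs, ys, degree):
    """Hypothetical helper: return [a0, a1, ..., a_degree] minimising
    sum_i (y_i - sum_k a_k * x_i**k)**2 (regression of y upon x)."""
    n = degree + 1
    # Centre and scale x first: raw sums of x**(2*degree) can make the normal
    # equations badly conditioned when x is of order hundreds.
    c = sum(xs) / len(xs)
    s = max(abs(x - c) for x in xs) or 1.0
    ts = [(x - c) / s for x in xs]
    # Normal equations in t = (x - c)/s: sum_k (sum_i t_i**(j+k)) b_k = sum_i y_i t_i**j.
    a = [[sum(t ** (j + k) for t in ts) for k in range(n)] for j in range(n)]
    rhs = [sum(y * t ** j for t, y in zip(ts, ys)) for j in range(n)]
    b = solve_linear(a, rhs)
    # Expand sum_k b_k ((x - c)/s)**k back into ordinary powers of x.
    coeffs = [0.0] * n
    for k, bk in enumerate(b):
        for j in range(k + 1):
            coeffs[j] += bk * comb(k, j) * (-c) ** (k - j) / s ** k
    return coeffs


# Usage: points lying exactly on y = 1 + 2x + 3x**2 should give back about [1, 2, 3].
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1 + 2 * x + 3 * x * x for x in xs]
print(polyfit_ls(xs, ys, 2))
```

Passing `degree=3` (or higher) gives the cubic and higher-degree regressions in exactly the same way.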

As an Exercise, here are some points that I recently had in a real application:

| x | y |
|---|---|
| 395.1 | 171.0 |
| 448.1 | 289.0 |
| 517.7 | 399.0 |
| 583.3 | 464.0 |
| 790.2 | 620.0 |

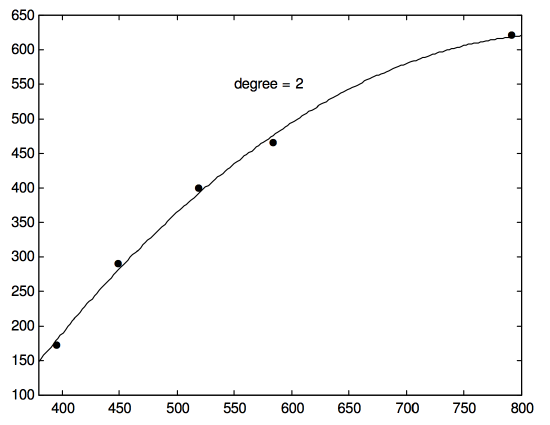

Draw these on a sheet of graph paper and draw by hand a nice smooth curve passing as close as possible to the points. Now calculate the least squares parabola (quadratic regression of y upon x) and see how close you were. I make it \(y = -961.34 + 3.7748x - 2.247\times10^{-3}x^{2}\). It is shown in Figure I.6C.
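To check the quoted parabola, a sketch along the following lines can be applied to the five points. The helpers `solve_linear` and `polyfit_ls` are hypothetical names; they form the normal Equations (after centring and scaling x, an assumption made here for numerical safety) and solve them by Gaussian elimination. Rounded to the precision quoted, the result should agree with the three coefficients given above.

```python
from math import comb


def solve_linear(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        p = max(range(col, n), key=lambda r: abs(m[r][col]))  # partial pivoting
        m[col], m[p] = m[p], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in reversed(range(n)):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def polyfit_ls(xs, ys, degree):
    """Hypothetical helper: least squares coefficients [a0, ..., a_degree] of y upon x."""
    n = degree + 1
    c = sum(xs) / len(xs)                   # centre of the x values
    s = max(abs(x - c) for x in xs) or 1.0  # scale factor for x
    ts = [(x - c) / s for x in xs]
    # Normal equations in the scaled variable t = (x - c)/s.
    a = [[sum(t ** (j + k) for t in ts) for k in range(n)] for j in range(n)]
    rhs = [sum(y * t ** j for t, y in zip(ts, ys)) for j in range(n)]
    b = solve_linear(a, rhs)
    # Convert powers of t back into powers of x.
    coeffs = [0.0] * n
    for k, bk in enumerate(b):
        for j in range(k + 1):
            coeffs[j] += bk * comb(k, j) * (-c) ** (k - j) / s ** k
    return coeffs


# The five points of the Exercise.
xs = [395.1, 448.1, 517.7, 583.3, 790.2]
ys = [171.0, 289.0, 399.0, 464.0, 620.0]

a0, a1, a2 = polyfit_ls(xs, ys, 2)
print(f"y = {a0:.2f} + {a1:.4f}x + ({a2:.4g})x^2")
```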

I now leave you to work out how to fit a least squares cubic (or indeed any polynomial) regression of y upon x to a set of data points. For the above data, I make the cubic fit to be

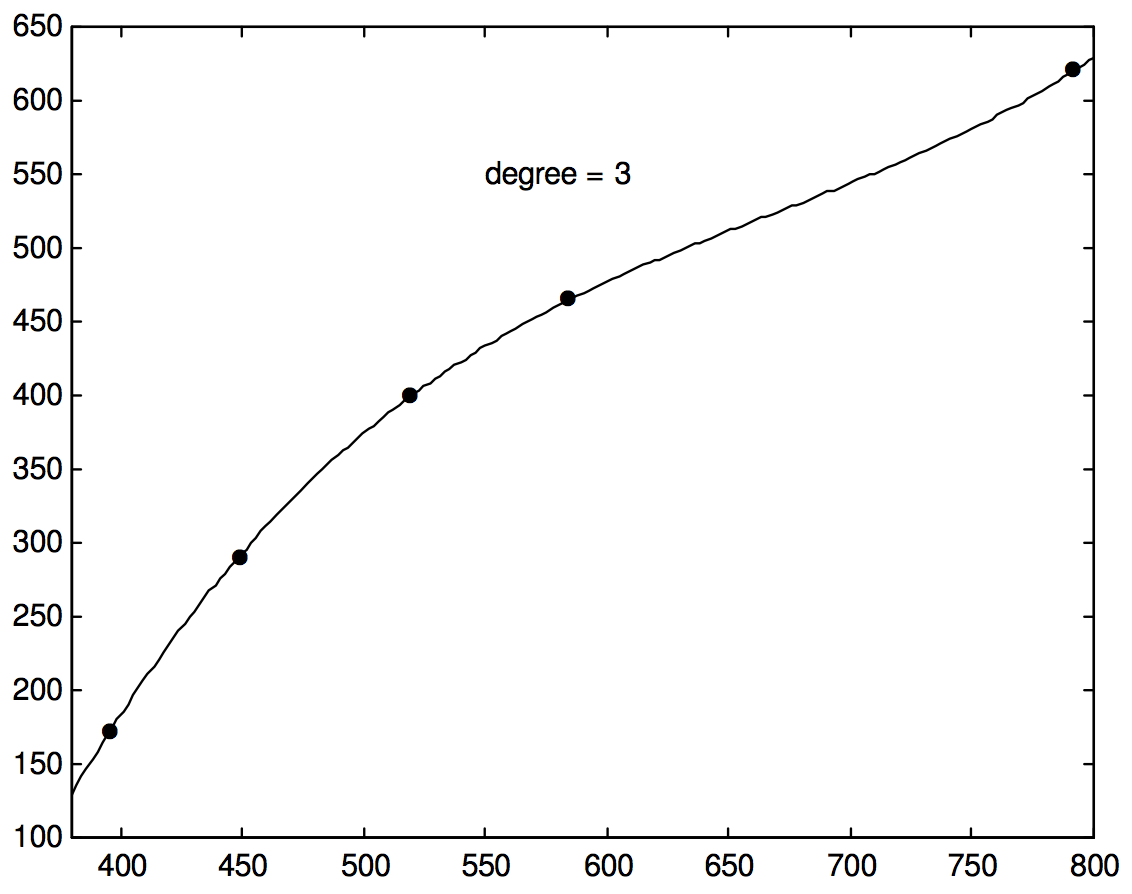

\[
y = -2537.605 + 12.4902x - 0.017777x^2 + 8.89\times10^{-6}x^3.
\]

This is shown in Figure I.6D and, on the scale of this drawing, it cannot be distinguished (within the range of x covered by the figure) from the quartic Equation that would go exactly through all five points.
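The cubic, and the quartic through all five points, can be obtained with a sketch like the one below by asking for degree 3 and degree 4. The helpers `solve_linear`, `polyfit_ls` and `evaluate` are hypothetical names; the fit uses the normal Equations after centring and scaling x (an assumption of this sketch). With five points and five coefficients, the least squares quartic has residuals that vanish to rounding error, which is why it passes exactly through the points.

```python
from math import comb


def solve_linear(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        p = max(range(col, n), key=lambda r: abs(m[r][col]))  # partial pivoting
        m[col], m[p] = m[p], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in reversed(range(n)):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def polyfit_ls(xs, ys, degree):
    """Hypothetical helper: least squares coefficients [a0, ..., a_degree] of y upon x."""
    n = degree + 1
    c = sum(xs) / len(xs)                   # centre of the x values
    s = max(abs(x - c) for x in xs) or 1.0  # scale factor for x
    ts = [(x - c) / s for x in xs]
    # Normal equations in the scaled variable t = (x - c)/s.
    a = [[sum(t ** (j + k) for t in ts) for k in range(n)] for j in range(n)]
    rhs = [sum(y * t ** j for t, y in zip(ts, ys)) for j in range(n)]
    b = solve_linear(a, rhs)
    # Convert powers of t back into powers of x.
    coeffs = [0.0] * n
    for k, bk in enumerate(b):
        for j in range(k + 1):
            coeffs[j] += bk * comb(k, j) * (-c) ** (k - j) / s ** k
    return coeffs


def evaluate(coeffs, x):
    """Evaluate a0 + a1*x + ... + an*x**n by Horner's rule."""
    result = 0.0
    for a in reversed(coeffs):
        result = result * x + a
    return result


xs = [395.1, 448.1, 517.7, 583.3, 790.2]
ys = [171.0, 289.0, 399.0, 464.0, 620.0]

cubic = polyfit_ls(xs, ys, 3)    # least squares cubic
quartic = polyfit_ls(xs, ys, 4)  # five coefficients for five points: interpolates
print("cubic coefficients:", cubic)
print("largest quartic residual:",
      max(abs(y - evaluate(quartic, x)) for x, y in zip(xs, ys)))
```

A practical point: \(x^3\) reaches about \(4.9\times10^{8}\) at the largest x, so rounding the \(x^3\) coefficient to three significant figures, as in the printed cubic, can shift the curve there by up to about 2.5 in y. When evaluating the fit, keep the coefficients at full precision.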

The cubic curve is a “better” fit than either the quadratic curve or a straight line in the sense that the higher the degree of the polynomial, the closer the fit and the smaller the sum of the squares of the residuals. But higher-degree polynomials have more “wiggles”, and you have to ask yourself whether a high-degree polynomial with lots of “wiggles” is really a realistic fit; perhaps you should be satisfied with a quadratic fit. Above all, it is important to understand that it is very dangerous to use the curve that you have calculated to extrapolate beyond the range of x for which you have data, and this is especially true of higher-degree polynomials.
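Both points can be seen numerically. The sketch below fits straight-line, quadratic and cubic regressions to the five points and prints, for each, the sum of the squares of the residuals and the value predicted at x = 1000, a point chosen arbitrarily beyond the largest observed x (790.2). The helper names `solve_linear`, `polyfit_ls`, `evaluate` and `ssr` are hypothetical, and centring and scaling x before forming the normal Equations is an assumption of this sketch.

```python
from math import comb


def solve_linear(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        p = max(range(col, n), key=lambda r: abs(m[r][col]))  # partial pivoting
        m[col], m[p] = m[p], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in reversed(range(n)):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def polyfit_ls(xs, ys, degree):
    """Hypothetical helper: least squares coefficients [a0, ..., a_degree] of y upon x."""
    n = degree + 1
    c = sum(xs) / len(xs)                   # centre of the x values
    s = max(abs(x - c) for x in xs) or 1.0  # scale factor for x
    ts = [(x - c) / s for x in xs]
    # Normal equations in the scaled variable t = (x - c)/s.
    a = [[sum(t ** (j + k) for t in ts) for k in range(n)] for j in range(n)]
    rhs = [sum(y * t ** j for t, y in zip(ts, ys)) for j in range(n)]
    b = solve_linear(a, rhs)
    # Convert powers of t back into powers of x.
    coeffs = [0.0] * n
    for k, bk in enumerate(b):
        for j in range(k + 1):
            coeffs[j] += bk * comb(k, j) * (-c) ** (k - j) / s ** k
    return coeffs


def evaluate(coeffs, x):
    """Evaluate a0 + a1*x + ... + an*x**n by Horner's rule."""
    result = 0.0
    for a in reversed(coeffs):
        result = result * x + a
    return result


def ssr(coeffs, xs, ys):
    """Sum of the squares of the residuals y_i - p(x_i)."""
    return sum((y - evaluate(coeffs, x)) ** 2 for x, y in zip(xs, ys))


xs = [395.1, 448.1, 517.7, 583.3, 790.2]
ys = [171.0, 289.0, 399.0, 464.0, 620.0]

fits = {d: polyfit_ls(xs, ys, d) for d in (1, 2, 3)}
x_out = 1000.0  # beyond the largest observed x, 790.2
for d, coeffs in fits.items():
    print(f"degree {d}: sum of squared residuals = {ssr(coeffs, xs, ys):.2f}, "
          f"y({x_out:g}) = {evaluate(coeffs, x_out):.1f}")
```

For these data the quadratic and the cubic both pass within about 12 units of y of every observed point, yet at x = 1000 they differ by roughly 500.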

FIGURE I.6C

FIGURE I.6D

What happens if the errors in x are not negligible, and the errors in x and y are comparable in size? In that case you want to plot a graph of y against x on a scale such that the unit for x is equal to the standard deviation of the x-residuals from the chosen polynomial and the unit for y is equal to the standard deviation of the y-residuals from the chosen polynomial. For a detailed and thorough account of how to do this, I refer you to a paper by D. York in Canadian Journal of Physics, 44, 1079 (1966).
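York's paper is the place to go for the general treatment. As a much simpler illustration in the same spirit, here is a sketch of Deming regression, a technique swapped in here and not York's method: it fits a straight line only, and it assumes that the ratio of the error variance in y to the error variance in x (the hypothetical parameter `ratio`) is known and the same for every point. With `ratio = 1` it minimises the sum of squared perpendicular distances from the points to the line, which is what measuring x and y in units of their error standard deviations amounts to; as `ratio` becomes very large (negligible errors in x) it approaches the ordinary regression of y upon x. The function name `deming_line` and the sample points are made up for illustration.

```python
from math import sqrt


def deming_line(xs, ys, ratio=1.0):
    """Hypothetical helper: fit y = intercept + slope*x allowing for errors in x and y.

    ratio is the assumed (variance of the y errors) / (variance of the x errors),
    taken to be the same for every point. ratio = 1 minimises the sum of squared
    perpendicular distances; a very large ratio approaches the ordinary
    regression of y upon x. Requires a non-zero covariance between x and y.
    """
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs) / n
    syy = sum((y - my) ** 2 for y in ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    d = syy - ratio * sxx
    slope = (d + sqrt(d * d + 4.0 * ratio * sxy * sxy)) / (2.0 * sxy)
    return my - slope * mx, slope  # the line passes through the centroid (mx, my)


# Usage on made-up points, assuming equal error variances in x and y.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]
intercept, slope = deming_line(xs, ys, ratio=1.0)
print(f"y = {intercept:.3f} + {slope:.3f}x")
```

The slope always lies between that of the regression of y upon x and the inverse of the slope of the regression of x upon y, which is a quick sanity check on any implementation.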