7.6: Density Matrix Approach

( \newcommand{\kernel}{\mathrm{null}\,}\)

The main alternative approach to the dynamics of open quantum systems, which is essentially a generalization of the one discussed in Sec. 2, is to extract the final results of interest from the dynamics of the density operator of our system s. Let us discuss this approach in detail. 54

We already know that the density matrix allows the calculation of the expectation value of any observable of the system s - see Eq. (5). However, our initial recipe (6) for the density matrix element calculation, which requires the knowledge of the exact state (2) of the whole Universe, is not too practicable, while the von Neumann equation (66) for the density matrix evolution is limited to cases in which probabilities Wj of the system states are fixed - thus excluding such important effects as the energy relaxation. However, such effects may be analyzed using a different assumption - that the system of interest interacts only with a local environment that is very close to its thermally-equilibrium state described, in the stationary-state basis, by a diagonal density matrix with the elements (24).

This calculation is facilitated by the following general observation. Let us number the basis states of the full local system (the system of our interest plus its local environment) by l, and use Eq. (5) to write ⟨A⟩=Tr(ˆAˆwl)≡∑l,l′All′wll=∑l,l′⟨l|ˆA|l′⟩⟨l′|ˆwl|l⟩, where ˆwl is the density operator of this local system. At a weak interaction between the system s and the local environment e, their states reside in different Hilbert spaces, so that we can write |l⟩=|sj⟩⊗|ek⟩, and if the observable A depends only on the coordinates of the system s of our interest, we may reduce Eq. (157) to the form similar to Eq. (5): ⟨A⟩=∑j,j′,k,k′⟨ek|⊗⟨sj|ˆA|sj′⟩⊗|ek′⟩⟨ek′|⊗⟨sj′|ˆwl|sj⟩⊗|ek⟩=∑j,j′Aij′⟨sj′(∑k⊗⟨ek|ˆwl|ek⟩⊗)∣sj⟩=Trj(ˆAˆw), where ˆw≡∑k⟨ek|ˆwl|ek⟩=Trkˆwl, showing how exactly the density operator ˆw of the system s may be calculated from ˆwl.

Now comes the key physical assumption of this approach: since we may select the local environment e to be much larger than the system s of our interest, we may consider the composite system l as a Hamiltonian one, with time-independent probabilities of its stationary states, so that for the description of the evolution in time of its full density operator ˆwl (again, in contrast to that, ˆw, of the system of our interest) we may use the von Neumann equation (66). Partitioning its right-hand side in accordance with Eq. (68), we get: iℏ˙ˆwl=[ˆHs,ˆwl]+[ˆHe,ˆwl]+[ˆHint,ˆwl]. The next step is to use the perturbation theory to solve this equation in the lowest order in ˆHint , which would yield, for the evolution of w, a non-vanishing contribution due to the interaction. For that, Eq. (161) is not very convenient, because its right-hand side contains two other terms, of a much larger scale than the interaction Hamiltonian. To mitigate this technical difficulty, the interaction picture that was discussed at the end of Sec.4.6, is very natural. (It is not necessary though, and I will use this picture mostly as an exercise of its application - unfortunately, the only example I can afford in this course.)

As a reminder, in that picture (whose entities will be marked with index "I", with the unmarked operators assumed to be in the Schrödinger picture), both the operators and the state vectors (and hence the density operator) depend on time. However, the time evolution of the operator of any observable A is described by an equation similar to Eq. (67), but with the unperturbed part of the Hamiltonian only - see Eq. (4.214). In model (68), this means iℏ˙ˆAI=[ˆAI,ˆH0]. where the unperturbed Hamiltonian consists of two parts defined in different Hilbert spaces: ˆH0≡ˆHs+ˆHe. On the other hand, the state vector’s dynamics is governed by the interaction evolution operator ˆu1 that obeys Eqs. (4.215). Since this equation, using the interaction-picture Hamiltonian (4.216), ˆHI≡ˆu†0ˆHintˆu0, is absolutely similar to the ordinary Schrödinger equation using the full Hamiltonian, we may repeat all arguments given at the beginning of Sec.3 to prove that the dynamics of the density operator in the interaction picture of a Hamiltonian system is governed by the following analog of the von Neumann equation (66): iℏ˙ˆwI=[ˆHI,ˆwI] where the index l is dropped for the notation simplicity. Since this equation is similar in structure (with the opposite sign) to the Heisenberg equation (67), we may use the solution Eq. (4.190) of the latter equation to write its analog: ˆwI(t)=ˆuI(t,0)ˆwl(0)ˆu†I(t,0). It is also straightforward to verify that in this picture, the expectation value of any observable A may be found from an expression similar to the basic Eq. (5): ⟨A⟩=Tr(ˆAIˆwI), showing again that the interaction and Schrödinger pictures give the same final results.

In the most frequent case of factorable interaction (90), 55 Eq. (162) is simplified for both operators participating in that product − for each one in its own way. In particular, for ˆA=ˆx, it yields

iℏ˙ˆxI=[ˆx1,ˆH0]≡[ˆxI,ˆHs]+[ˆxI,ˆHe]. Since the coordinate operator is defined in the Hilbert space of our system s, it commutes with the Hamiltonian of the environment, so that we finally get iℏ˙ˆxI=[ˆxI,ˆHs]. On the other hand, if ˆA=ˆF, this operator is defined in the Hilbert space of the environment, and commutes with the Hamiltonian of the unperturbed system s. As a result, we get iℏ˙ˆFI=[ˆFI,ˆHe]. This means that with our time-independent unperturbed Hamiltonians, ˆHs and ˆHe, the time evolution of the interaction-picture operators is rather simple. In particular, the analogy between Eq. (170) and Eq. (93) allows us to immediately write the following analog of Eq. (94): ˆFI(t)=exp{+iℏˆHet}ˆF(0)exp{−iℏˆHet}, so that in the stationary-state basis n of the environment, (ˆFI)nn′(t)=exp{+iℏEnt}Fnn′(0)exp{−iℏEn′t}≡Fnn′(0)exp{−iEn−En′ℏt}, and similarly (but in the basis of the stationary states of system s ) for operator ˆx. As a result, the righthand side of Eq. (164) may be also factored: ˆHI(t)≡ˆu†0(t,0)ˆHintˆu0(t,0)=exp{iℏ(ˆHs+ˆHe)t}(−ˆxˆF)exp{−iℏ(ˆHs+ˆHe)t}=−(exp{+iℏˆHst}ˆxexp{−iℏˆHst})(exp{+iℏˆHet}ˆF(0)exp{−iℏˆHet})≡−ˆxI(t)ˆFI(t). So, the transfer to the interaction picture has taken some time, but now it enables a smooth ride. 56 Indeed, just as in Sec. 4, we may rewrite Eq. (165) in the integral form: ˆwI(t)=1iℏ∫t−∞[ˆHI(t′),ˆwI(t′)]dt′; plugging this result into the right-hand side of Eq. (165), we get ˙ˆwI(t)=−1ℏ2∫t−∞[ˆHI(t),[ˆHI(t′),ˆwI(t′)]]dt′=−1ℏ2∫t−∞[ˆx(t)ˆF(t),[ˆx(t′)ˆF(t′),ˆwI(t′)]]dt′, where, for the notation’s brevity, from this point on I will strip the operators ˆx and ˆF of their index "I". (I hope their time dependence indicates the interaction picture clearly enough.)

So far, this equation is exact (and cannot be solved analytically), but this is a good time to notice that even if we approximate the density operator on its right-hand side by its unperturbed, factorable "value" (corresponding to no interaction between the system s and its thermally-equilibrium environment e ), 57 ˆw1(t′)→ˆw(t′)ˆwe, with ⟨en|ˆwe|en′⟩=Wnδnn′ where en are the stationary states of the environment and Wn are the Gibbs probabilities (24), Eq. (175) still describes nontrivial time evolution of the density operator. This is exactly the first non-vanishing approximation (in the weak interaction) we have been looking for. Now using Eq. (160), we find the equation of evolution of the density operator of the system of our interest: ˙ˆw(t)=−1ℏ2∫t−∞Trn[ˆx(t)ˆF(t),[ˆx(t′)ˆF(t′),ˆw(t′)ˆwe]]dt′, where the trace is over the stationary states of the environment. To spell out the right-hand side of Eq. (177), note again that the coordinate and force operators commute with each other (but not with themselves at different time moments!) and hence may be swapped at will, so that we may write Trn[…,[…,…]]=ˆx(t)ˆx(t′)ˆw(t′)Trn[ˆF(t)ˆF(t′)ˆwe]−ˆx(t)ˆw(t′)ˆx(t′)Trn[ˆF(t)ˆweˆF(t′)]−ˆx(t′)ˆw(t′)ˆx(t)Trn[ˆF(t′)ˆweˆF(t)]+ˆw(t′)ˆx(t′)ˆx(t)Trn[ˆweˆF(t′)ˆF(t)]=ˆx(t)ˆx(t′)ˆw(t′)∑n,n′Fnn′(t)Fn′n(t′)Wn−ˆx(t)ˆw(t′)ˆx(t′)∑n,n′Fnn′(t)Wn′Fn′n(t′)−ˆx(t′)ˆw(t′)ˆx(t)∑n,n′Fnn′(t′)Wn′Fn′n(t)+ˆw(t′)ˆx(t′)ˆx(t)∑n,n′WnFnn′(t′)Fn′n(t). Since the summation over both indices n and n ’ in this expression is over the same energy level set (of all stationary states of the environment), we may swap these indices in any of the sums. Doing this only in the terms including the factors Wn, we turn them into Wn, so that this factor becomes common: Trn[…,[…,…]]=∑n,n′Wn[ˆx(t)ˆx(t′)ˆw(t′)Fnn′(t)Fn′n(t′)−ˆx(t)ˆw(t′)ˆx(t′)Fn′n(t)Fnn′(t′)−ˆx(t′)ˆwˆx(t)Fn′n(t′)Fnn′(t)+ˆwˆx(t′)ˆx(t)Fnn′(t′)Fn′n(t)]. Now using Eq. (172), we get Trn[…,[…,…]]=∑n,n′Wn|Fnn′|2×[ˆx(t)ˆx(t′)ˆw(t′)exp{+i˜E(t−t′)ℏ}−ˆx(t)ˆw(t′)ˆx(t′)exp{−i˜E(t−t′)ℏ}−ˆx(t′)ˆw(t′)ˆx(t)exp{+i˜E(t−t′)ℏ}+ˆw(t′)ˆx(t′)ˆx(t)exp{−i˜E(t−t′)ℏ}]≡∑n,n′Wn|Fnn′|2cos˜E(t−t′)ℏ[ˆx(t),[ˆx(t′),ˆw(t′)]]+i∑n,n′Wn|Fnn′|2sin˜E(t−t′)ℏ[ˆx(t),{ˆx(t′),ˆw(t′)}] Comparing the two double sums participating in this expression with Eqs. (108) and (111), we see that they are nothing else than, respectively, the symmetrized correlation function and the temporal Green’s function (multiplied by ℏ/2 ) of the time-difference argument τ=t−t′≥0. As the result, Eq. (177) takes a compact form: ˙ˆw(t)=−1ℏ2∫t−∞KF(t−t′)[ˆx(t),[ˆx(t′),ˆw(t′)]]dt′−i2ℏ∫t−∞G(t−t′)[ˆx(t),{ˆx(t′),ˆw(t′)}]dt′ Let me hope that the readers (especially the ones who have braved through this derivation) enjoy this beautiful result as much as I do. It gives an equation for the time evolution of the density operator of the system of our interest (s), with the effects of its environment represented only by two real, c-number functions of τ : one (KF) describing the fluctuation force exerted by the environment, and the other one (G) representing its ensemble-averaged environment’s response to the system’s evolution. And most spectacularly, these are exactly the same functions that participate in the alternative, HeisenbergLangevin approach to the problem, and hence related to each other by the fluctuation-dissipation theorem (134).

After a short celebration, let us acknowledge that Eq. (181) is still an integro-differential equation, and needs to be solved together with Eq. (169) for the system coordinate’s evolution. Such equations do not allow explicit analytical solutions, besides a few very simple (and not very interesting) cases. For most applications, further simplifications should be made. One of them is based on the fact (which was already discussed in Sec. 3) that both environmental functions participating in Eq. (181) tend to zero when their argument τ becomes much larger than the environment’s correlation time τc, independent of the system-to-environment coupling strength. If the coupling is sufficiently weak, the time scales Tnn ’ of the evolution of the density matrix elements, following from Eq. (181), are much longer than this correlation time, and also the characteristic time scale of the coordinate operator’s evolution. In this limit, all arguments t′ of the density operator, giving substantial contributions to the right-hand side of Eq. (181), are so close to t that it does not matter whether its argument is t ’ or just t. This simplification, w(t′)≈w(t), is known as the Markov approximation. 58However, this approximation alone is still insufficient for finding the general solution of Eq. (181). Substantial further progress is possible in two important cases. The most important of them is when the intrinsic Hamiltonian ˆHs of the system s of our of interest does not depend on time explicitly and has a discrete eigenenergy spectrum En59 with well-separated levels: |En−En′|>>ℏTnn′. Let us see what does this condition yield for Eq. (181), rewritten for the matrix elements in the stationary state basis, in the Markov approximation: ˙wnn′=−1ℏ2∫t−∞KF(t−t′)[ˆx(t),[ˆx(t′),ˆw]]nn′dt′−i2ℏ∫t−∞G(t−t′)[ˆx(t),{ˆx(t′),ˆw}]nn′dt′. After spelling out the commutators, the right-hand side of this expression includes four operator products, which differ "only" by the operator order. Let us first have a look at one of these products, [ˆx(t)ˆx(t′)ˆw]nn′≡∑m,m′xnm(t)xmm′(t′)wm′n′, where the indices m and m ’ run over the same set of stationary states of the system s of our interest as the indices n and n ’. According to Eq. (169) with a time-independent Hs, the matrix elements xnn′ ’ (in the stationary state basis) oscillate in time as exp{iωnnt}, so that [ˆx(t)ˆx(t′)ˆw]mn′=∑m,m′xnmxmm′,exp{i(ωnmt+ωmm′t′)}wm′n′, where on the right-hand side, the coordinate matrix elements are in the Schrödinger picture, and the usual notation (6.85) is used for the quantum transition frequencies: ℏωnn′≡En−En′. According to the condition (182), frequencies ωnn′ with n≠n′ are much higher than the speed of evolution of the density matrix elements (in the interaction picture!) - on both the left-hand and righthand sides of Eq. (183). Hence, on the right-hand side of Eq. (183), we may keep only the terms that do not oscillate with these frequencies ωnn, because rapidly-oscillating terms would give negligible contributions to the density matrix dynamics. 60 For that, in the double sum (185) we should save only the terms proportional to the difference (t−t′) because they will give (after the integration over t′ ) a slowly changing contribution to the right-hand side. 61 These terms should have ωnm+ωmm′=0, i.e. (En− Em)+(Em−Em′)≡En−Em′=0. For a non-degenerate energy spectrum, this requirement means m′=n; as a result, the double sum is reduced to a single one: [ˆx(t)ˆx(t′)ˆw]nn′≈wnn′∑mxnmxmnexp{iωnm(t−t′)}≡wnn′∑m|xnm|2exp{iωnm(t−t′)} Another product, [ˆwˆx(t′)ˆx(t)]nn′, which appears on the right-hand side of Eq. (183), may be simplified absolutely similarly, giving [ˆwˆx(t′)ˆx(t)]nn′≈∑m|xn′m|2exp{iωn′m(t′−t)}wnn′. These expressions hold whether n and n ’ are equal or not. The situation is different for two other products on the right-hand side of Eq. (183), with w sandwiched between x(t) and x(t′). For example, [ˆx(t)ˆwˆx(t′)]nn′=∑m,m′xnm(t)wmm′xm′n′(t′)=∑m,m′xnmwmm′xm′n′exp{i(ωnmt+ωm′n′t′)}. For this term, the same requirement of having a fast oscillating function of (t−t′) only, yields a different condition: ωnm+ωm′n′=0, i.e. (En−Em)+(Em′−En′)=0. Here the double sum’s reduction is possible only if we make an additional assumption that all interlevel energy distances are unique, i.e. our system of interest has no equidistant levels (such as in the harmonic oscillator). For the diagonal elements (n=n′), the RWA requirement is reduced to m=m ’, giving sums over all diagonal elements of the density matrix: [ˆx(t)ˆwˆx(t′)]nn=∑m|xnm|2exp{iωnm(t−t′)}wmm. (Another similar term, [ˆx(t′)ˆwˆx(t)]nn, is just a complex conjugate of (191).) However, for off-diagonal matrix elements (n≠n′), the situation is different: Eq. (190) may be satisfied only if m=n and also m ’ =n′, so that the double sum is reduced to just one, non-oscillating term: [ˆx(t)ˆwˆx(t′)]nn′=xnnwnn′xn′n′, for n≠n′. The second similar term, [ˆx(t′)ˆwˆx(t)]nn, is exactly the same, so that in one of the integrals of Eq. (183), these terms add up, while in the second one, they cancel.

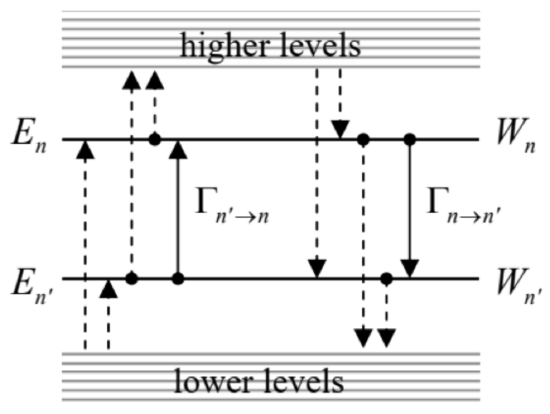

This is why the final equations of evolution look differently for diagonal and off-diagonal elements of the density matrix. For the former case (n=n′), Eq. (183) is reduced to the so-called master equation 62 relating diagonal elements wnn of the density matrix, i.e. the energy level occupancies Wn:63 ˙Wn=∑m≠n|xnm|2∫∞0[−1ℏ2KF(τ)(Wn−Wm)(exp{iωnmτ}+exp{−iωnmτ})−i2ℏG(τ)(−Wn−Wm)(exp{iωnmτ}−exp{−iωnmτ})]dτ where τ≡t−t′. Changing the summation index notation from m to n ’, we may rewrite the master equation in its canonical form ˙Wn=∑n′≠n(Γn′→nWn′−Γn→n′Wn) where the coefficients Γn′→n≡|xnn′|2∫∞0[2ℏ2KF(τ)cosωnn′τ−1ℏG(τ)sinωnn′τ]dt′, are called the interlevel transition rates. 64 Eq. (194) has a very clear physical meaning of the level occupancy dynamics (i.e. the balance of the probability flows ΓW ) due to quantum transitions between the energy levels (see Fig. 7), in our current case caused by the interaction between the system of our interest and its environment.

The Fourier transforms (113) and (123) enable us to express the two integrals in Eq. (195) via, respectively, the symmetrized spectral density SF(ω) of environment force fluctuations and the imaginary part χ′′(ω) of the generalized susceptibility, both at frequency ω=ωnn. After that we may use the fluctuation-dissipation theorem (134) to exclude the former function, getting finally 65

Transition rates via χ′′(ω)Γn′→n=1ℏ|xnn′|2χ′′(ωnn′)(cothℏωnn′2kBT−1)≡2ℏ|xnn′|2χ′′(ωnn′)exp{(En−En′)/kBT}−1.

Note that since the imaginary part χ " of the generalized susceptibility is an odd function of frequency, Eq. (196) is in compliance with the Gibbs distribution for arbitrary temperature. Indeed, according to this equation, the ratio of the "up" and "down" rates for each pair of levels equals Γn′→nΓn→n′=χ′′(ωnn′)exp{(En−En′)/kBT}−1/χ′′(ωn′n)exp{(En′−En)/kBT}−1≡exp{−En−En′kBT} On the other hand, according to the Gibbs distribution (24), in thermal equilibrium the level populations should be in the same proportion. Hence, Eq. (196) complies with the so-called detailed balance equation, WnΓn→n′=Wn′Γn′→n, valid in the equilibrium for each pair {n,n′}, so that all right-hand sides of all Eqs. (194), and hence the time derivatives of all Wn vanish - as they should. Thus, the stationary solution of the master equations indeed describes the thermal equilibrium correctly.

The system of master equations (194), frequently complemented by additional terms on their right-hand sides, describing interlevel transitions due to other factors (e.g., by an external ac force with a frequency close to one of ωnn ’), is the key starting point for practical analyses of many quantum systems, notably including optical quantum amplifiers and generators (lasers). It is important to remember that they are strictly valid only in the rotating-wave approximation, i.e. if Eq. (182) is well satisfied for all n and n ’ of substance.For a particular but very important case of a two-level system (with, say, E1>E2 ), the rate Γ1→2 may be interpreted (especially in the low-temperature limit kBT<<ℏ12=E1−E2, when Γ1→2≫Γ2→1 ) as the reciprocal characteristic time 1/T1≡Γ1→2 of the energy relaxation process that brings the diagonal elements of the density matrix to their thermally-equilibrium values (24). For the Ohmic dissipation described by Eqs. (137)-(138), Eq. (196) yields 1T1≡Γ1→2=2ℏ2|x12|2η×{ℏω12, for kBT<<ℏω12,kBT, for ℏω12<<kBT. This relaxation time T1 should not be confused with the characteristic time T2 of the off-diagonal element decay, i.e. dephasing, which was already discussed in Sec. 3. In this context, let us see what do Eqs. (183) have to say about the dephasing rates. Taking into account our intermediate results (187)(192), and merging the non-oscillating components (with m=n and m=n′ ) of the sums Eq. (187) and (188) with the terms (192), which also do not oscillate in time, we get the following equation: 66 ˙wnn′=−{∫∞0[1ℏ2KF(τ)(∑m≠n|xnm|2exp{iωnmτ}+∑m≠n′|xn′m|2exp{−iωn′mτ}+(xnn−xn′′′)2)+i2ℏG(τ)(∑m≠n|xnm|2exp{iωnmτ}−∑m≠n′|xn′m|2exp{−iωn′mτ})]dτ}wnn′, for n≠n′. In contrast with Eq. (194), the right-hand side of this equation includes both a real and an imaginary part, and hence it may be represented as ˙wnn′=−(1/Tnn′+iΔnn′)wnn′, where both factors 1/Tnn ’ and Δnn ’ are real. As Eq. (201) shows, the second term in the right-hand side of this equation causes slow oscillations of the matrix elements wnn, which, after returning to the Schrödinger picture, add just small corrections 67 to the unperturbed frequencies (186) of their oscillations, and are not important for most applications. More important is the first term, proportional to 1Tnn′=∫∞0[1ℏ2KF(τ)(∑m≠n|xnm|2cosωnmτ+∑m≠n′|xn′m|2cosωn′mτ+(xnn−xn′n′)2) −12ℏG(τ)(∑m≠n|xnm|2sinωnmτ+∑m≠n′|xn′m|2sinωn′mτ)]dτ, for n≠n′ because it describes the effect completely absent without the environment coupling: exponential decay of the off-diagonal matrix elements, i.e. the dephasing. Comparing the first two terms of Eq. (202) with Eq. (195), we see that the dephasing rates may be described by a very simple formula: 1Tnn′=12(∑m≠nΓn→m+∑m≠n′Γn′→m)+πℏ2(xnn−xn′n′)2SF(0)≡12(∑m≠nΓn→m+∑m≠n′Γn′→m)+kBTℏ2η(xnn−xn′n′)2, for n≠n′, where the low-frequency drag coefficient η is again defined as limω→0χ " (ω)/ω− see Eq. (138).

This result shows that two effects yield independent contributions to the dephasing. The first of them may be interpreted as a result of "virtual" transitions of the system, from the levels n and n ’ of our interest, to other energy levels m; according to Eq. (195), this contribution is proportional to the strength of coupling to the environment at relatively high frequencies ωnm and ωn′m. (If the energy quanta ℏω of these frequencies are much larger than the thermal fluctuation scale kBT, then only the lower levels, with Em<max[En,En] are important.) On the contrary, the second contribution is due to low-frequency, essentially classical fluctuations of the environment, and hence to the low-frequency dissipative susceptibility. In the Ohmic dissipation case, when the ratio η≡χ " (ω)/ω is frequencyindependent, both contributions are of the same order, but their exact relation depends on the matrix elements xnn ’ of a particular system.

For example, returning for a minute to the two-level system discussed in Sec. 3, described by our current theory with the replacement ˆx→ˆσz, the high-frequency contributions to dephasing vanish because of the absence of transitions between energy levels, while the low-frequency contribution yields 1T2≡1T12=kBTℏ2η(xnn−xn′n′)2→kBTℏ2η[(σz)11−(σz)22]2=4kBTℏ2η thus exactly reproducing the result (142) of the Heisenberg-Langevin approach. 68 Note also that the expression for T2 is very close in structure to Eq. (199) for T1 (in the high-temperature limit). However, for the simple interaction model (70) that was explored in Sec. 3, the off-diagonal elements of the operator ˆx=ˆσz in the stationary-state z-basis vanish, so that T1→∞, while T2 says finite. The physics of this result is very clear, for example, for the two-well implementation of the model (see Fig. 4 and its discussion): it is suitable for the case of a very high energy barrier between the wells, which inhibits tunneling, and hence any change of the well occupancies. However, T1 may become finite, and comparable with T2, if tunneling between the wells is substantial. 69

Because of the reason explained above, the derivation of Eqs. (200)-(204) is not valid for systems with equidistant energy spectra - for example, the harmonic oscillator. For this particular, but very important system, with its simple matrix elements xnn ’ given by Eqs. (5.92), it is longish but straightforward to repeat the above calculations, starting from (183), to obtain an equation similar in structure to Eq. (200), but with two other terms, proportional to wn±1,n′±1, on its right-hand side. Neglecting the minor Lamb-shift term, the equation reads ˙wnn′=−δ{[(ne+1)(n+n′)+ne(n+n′+2)]wnn′−2(ne+1)[(n+1)(n′+1)]1/2wn+1,n′+1−2ne(nn′)1/2wn−1,n′−1}. Here δ is the effective damping coefficient, 70 δ≡x202ℏImχ(ω0)≡Imχ(ω0)2mω0, equal to just η/2m for the Ohmic dissipation, and ne is the equilibrium number of oscillator’s excitations, given by Eq. (26b), with the environment’s temperature T. (I am using this new notation because in dynamics, the instant expectation value ⟨n⟩ may be time-dependent, and is generally different from its equilibrium value ne.)

As a remark: the derivation of Eq. (205) might be started at a bit earlier point, from the Markov approximation applied to Eq. (181), expressing the coordinate operator via the creation-annihilation operators (5.65). This procedure gives the result in the operator (i.e. basis-independent) form: 71 ˙ˆw=−δ[(ne+1)({ˆa†ˆa,ˆw}−2ˆaˆwˆa†)+ne({ˆaˆa†,ˆw}−2ˆa†ˆwˆa)]. In the Fock state basis, this equation immediately reduces to Eq. (205); however, Eq. (207) may be more convenient for some applications.

Returning to Eq. (205), we see that it relates only the elements wnn, located at the same distance (n−n′) from the principal diagonal of the density matrix. This means, in particular, that the dynamics of the diagonal elements wnn of the matrix, i.e. the Fock state probabilities Wn, is independent of the offdiagonal elements, and may be represented in the form (194), truncated to the transitions between the adjacent energy levels only (n′=n±1):

˙Wn=(Γn+1→nWn+1−Γn→n+1Wn)+(Γn−1→nWn−1−Γn→n−1Wn), with the following rates: Γn+1→n=2δ(n+1)(ne+1),Γn→n+1=2δ(n+1)ne,Γn−1→n=2δnne,Γn→n−1=2δn(ne+1). Since according to the definition of ne, given by Eq. (26b), ne=1exp{ℏω0/kBT}−1, so that ne+1=exp{ℏω0/kBT}exp{ℏω0/kBT}−1≡−1exp{−ℏω0/kBT}−1, taking into account Eqs. (5.92), (186), (206), and the asymmetry of the function χ " (ω), we see that these rates are again described by Eq. (196), even though the last formula was derived for non-equidistant energy spectra.

Hence the only substantial new feature of the master equation for the harmonic oscillator, is that the decay of the off-diagonal elements of its density matrix is scaled by the same parameter (2δ) as that of the decay of its diagonal elements, i.e. there is no radical difference between the dephasing and energy-relaxation times T2 and T1. This fact may be interpreted as the result of the independence of the energy level distances, ℏω0, of the fluctuations F(t) exerted on the oscillator by the environment, so that their low-frequency density, SF(0), does not contribute to the dephasing. (This fact formally follows also from Eq. (203) as well, taking into account that for the oscillator, xnn=xn′n′=0.)

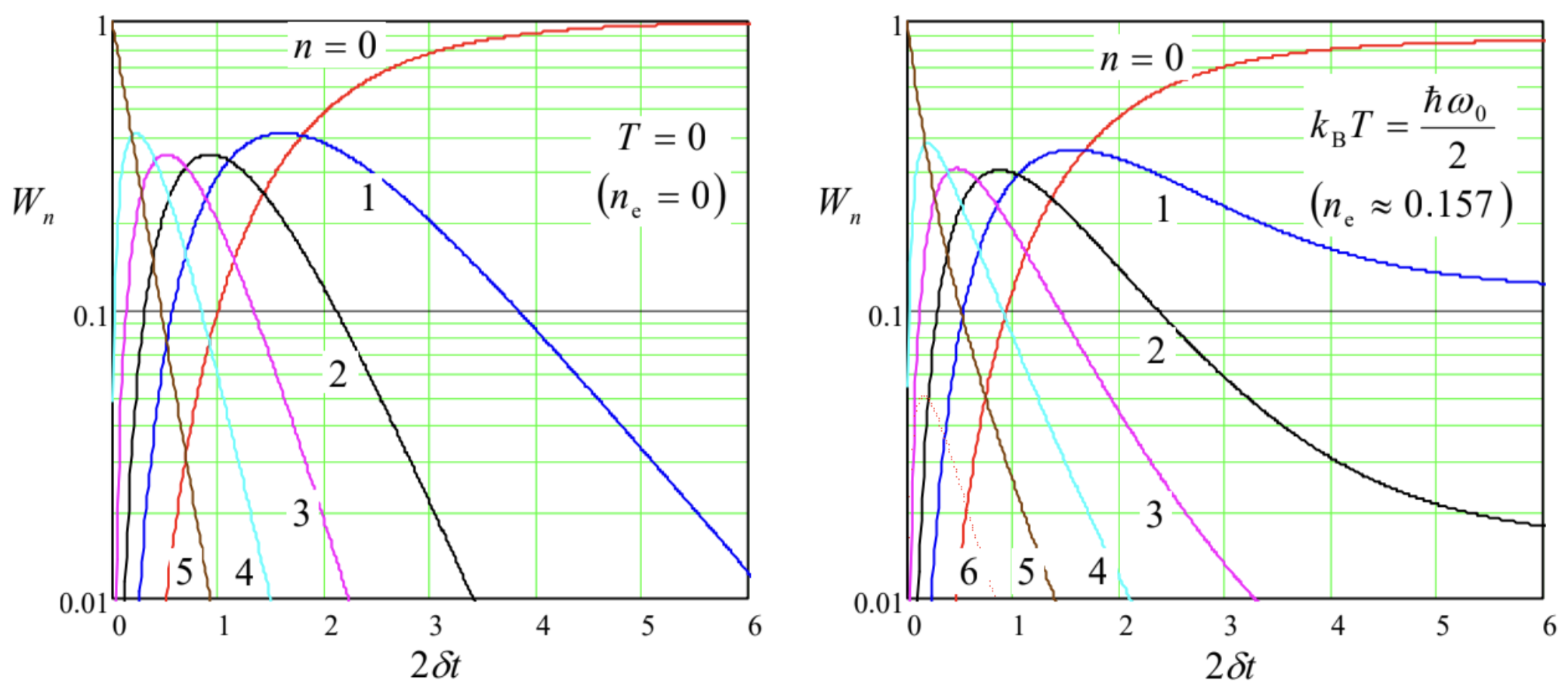

The simple equidistant structure of the oscillator’s spectrum makes it possible to readily solve the system of Eqs. (208), with n=0,1,2,…, for some important cases. In particular, if the initial state of the oscillator is a classical mixture, with no off-diagonal elements, its further relaxation proceeds as such a mixture: wnn⋅(t)=0 for all n′≠n.72 In particular, it is straightforward to use Eq. (208) to verify that if the initial classical mixture obeys the Gibbs distribution ( 25 ), but with a temperature Ti different from that of the environment (Te), then the relaxation process is reduced to a simple exponential transient of the effective temperature from Ti to Te : Wn(t)=exp{−nℏω0kBTef(t)}(1−exp{−ℏω0kBTef(t)}), with Tef(t)=Tie−2δt+Te(1−e−2δt), with the corresponding evolution of the expectation value of the full energy E−cf. Eq. (26b): ⟨E⟩(t)=ℏω02+ℏω0⟨n⟩(t),⟨n⟩(t)=1exp{ℏω0/kBTef(t)}−1→t→∞ne. However, if the initial state of the oscillator is different (say, corresponds to some upper Fock state), the relaxation process, described by Eqs. (208)-(209), is more complex - see, e.g., Fig. 8. At low temperatures (Fig. 8a), it may be interpreted as a gradual "roll" of the probability distribution down the energy staircase, with a gradually decreasing velocity dn/dt∝n. However, at substantial temperatures, with kBT∼ℏω0 (Fig. 8 b ), this "roll-down" is saturated when the level occupancies Wn(t) approach their equilibrium values (25)⋅73

The analysis of this process may be simplified in the case when W(n,t)≡Wn(t) is a smooth function of the energy level number n, limited to high levels: n≫>1. In this limit, we may use the Taylor expansion of this function (written for the points Δn=±1 ), truncated to three leading terms: Wn±1(t)≡W(n±1,t)≈W(n,t)±∂W(n,t)∂n+12∂2W(n,t)∂n2. Plugging this expression into Eqs. (208)-(209), we get for the function W(n,t) a partial differential equation, which may be recast in the following form: ∂W∂t=−∂∂n[f(n)W]+∂2∂n2[d(n)W], with f(n)≡2δ(ne−n),d(n)≡2δ(ne+1/2)n. Since at n≫>1, the oscillator’s energy E is close to ℏω0n, this energy diffusion equation (sometimes incorrectly called the Fokker-Planck equation - see below) essentially describes the time evolution of the continuous probability density w(E,t), which may be defined as w(E,t)≡W(E/ℏω0,t)/ℏω0.74

This continuous approximation naturally reminds us of the need to discuss dissipative systems with a continuous spectrum. Unfortunately, for such systems the few (relatively :-) simple results that may be obtained from the basic Eq. (181), are essentially classical in nature and are discussed in detail in the SM part of this series. Here, I will give only a simple illustration. Let us consider a 1D particle that interacts weakly with a thermally-equilibrium environment, but otherwise is free to move along the x-axis. As we know from Chapters 2 and 5 , in this case, the most convenient basis is that of the momentum eigenstates p. In the momentum representation, the density matrix is just the c-number function w(p,p′), defined by Eq. (54), which was already discussed in brief in Sec. 2 . On the other hand, the coordinate operator, which participates in the right-hand side of Eq. (181), has the form given by the first of Eqs. (4.269), ˆx=iℏ∂∂p, dual to the coordinate-representation formula (4.268). As we already know, such operators are local see, e.g., Eq. (4.244). Due to this locality, the whole right-hand side of Eq. (181) is local as well, and hence (within the framework of our perturbative treatment) the interaction with the environment affects only the diagonal values w(p,p) of the density matrix, i.e. the momentum probability density w(p).

Let us find the equation governing the evolution of this function in time in the Markov approximation, when the time scale of the density matrix evolution is much longer than the correlation time τc of the environment, i.e. the time scale of the functions KF(τ) and G(τ). In this approximation, we may take the matrix elements out of the first integral of Eq. (181), −1ℏ2∫t−∞KF(t−t′)dt′[ˆx(t),[ˆx(t′),ˆw(t′)]]≈−1ℏ2∫∞0KF(τ)dτ[ˆx,[ˆx,ˆw]]=−πℏ2SF(0)[ˆx,[ˆx,ˆw]]=−kBTℏ2η[ˆx,[ˆx,ˆw]], and calculate the last double commutator in the Schrödinger picture. This may be done either using an explicit expression for the matrix elements of the coordinate operator or in a simpler way - using the same trick as at the derivation of the Ehrenfest theorem in Sec. 5.2. Namely, expanding an arbitrary function f(p) into the Taylor series in p, f(p)=∞∑k=01k!∂kf∂pkpk, and using Eq. (215), we can prove the following simple commutation relation [ˆx,f]=∞∑k=01k!∂kf∂pk[ˆx,pk]=∞∑k=01k!∂kf∂pk(iℏkpk−1)=iℏ∞∑k=11(k−1)!∂k−1∂pk−1(∂f∂p)pk−1=iℏ∂f∂p. Now applying this result sequentially, first to w and then to the resulting commutator, we get [ˆx,[ˆx,w]]=[ˆx,iℏ∂w∂p]=iℏ∂∂p(iℏ∂w∂p)=−ℏ2∂2w∂p2. It may look like the second integral in Eq. (181) might be simplified similarly. However, it vanishes at p′→p, and t′→t, so that to calculate the first non-vanishing contribution from that integral for p=p′, we have to take into account the small difference τ≡t−t′∼τc between the arguments of the coordinate operators under that integral. This may be done using Eq. (169) with the free-particle’s Hamiltonian consisting of the kinetic-energy contribution alone: ˆx(t′)−ˆx(t)≈−τ˙ˆx=−τ1iℏ[ˆx,ˆHs]=−τ1iℏ[ˆx,ˆp22m]=−τˆpm, where the exact argument of the operator on the right-hand side is already unimportant and may be taken for t. As a result, we may use the last of Eqs. (136) to reduce the second term on the right-hand side of Eq. (181) to −i2ℏ∫t−∞G(t−t′)[ˆx(t),{ˆx(t′),ˆw(t′)}]dt′≈i2ℏ∫∞0G(τ)τdτ[ˆx,{ˆpm,ˆw}]=η2iℏ[ˆx,{ˆpm,ˆw}]. In the momentum representation, the momentum operator and the density matrix w are just c-numbers and commute, so that, applying Eq. (218) to the product pw, we get [ˆx,{ˆpm,ˆw}]=[ˆx,2pmw]=2iℏ∂∂p(pmw), and may finally reduce the integro-differential equation Eq. (181) to a partial differential equation: ∂w∂t=−∂∂p(Fw)+ηkBT∂2w∂p2, with F≡−ηpm. This is the 1D form of the famous Fokker-Planck equation describing the classical statistics of motion of a particle (in our particular case, of a free particle) in an environment providing a linear drag characterized by the coefficient η; it belongs to the same drift-diffusion type as Eq. (214). The first, drift term on its right-hand side describes the particle’s deceleration due to the drag force (137),F=−ηp/m= −ηv, provided by the environment. The second, diffusion term on the right-hand side of Eq. (223) describes the effect of fluctuations: the particle’s momentum’ random walk around its average (driftaffected, and hence time-dependent) value. The walk obeys the law similar to Eq. (85), but with the momentum-space diffusion coefficient Dp=ηkBT This is the reciprocal-space version of the fundamental Einstein relation between the dissipation (friction) and fluctuations, in this classical limit represented by their thermal energy scale kBT.75

Just for the reader’s reference, let me note that the Fokker-Planck equation (223) may be readily generalized to the 3D motion of a particle under the effect of an additional external force, 76 and in this more general form is the basis for many important applications; however, due to its classical character, its discussion is also left for the SM part of this series. 77

To summarize our discussion of the two alternative approaches to the analysis of quantum systems interacting with a thermally-equilibrium environment, described in the last three sections, let me emphasize again that they give different descriptions of the same phenomena, and are characterized by the same two functions G(τ) and KF(τ). Namely, in the Heisenberg-Langevin approach, we describe the system by operators that change (fluctuate) in time, even in the thermal equilibrium, while in the density-matrix approach, the system is described by non-fluctuating probability functions, such as Wn(t) or w(p,t), which are stationary in equilibrium. In the cases when a problem may be solved analytically to the end by both methods (for example, for a harmonic oscillator), they give identical results.

54 As in Sec. 4, the reader not interested in the derivation of the basic equation (181) of the density matrix evolution may immediately jump to the discussion of this equation and its applications.

55 A similar analysis of a more general case, when the interaction with the environment has to be represented as a sum of products of the type (90), may be found, for example, in the monograph by K. Blum, Density Matrix Theory and Applications, 3rd ed., Springer, 2012.

56 If we used either the Schrödinger or the Heisenberg picture instead, the forthcoming Eq. (175) would pick up a rather annoying multitude of fast-oscillating exponents, of different time arguments, on its right-hand side.

57 For the notation simplicity, the fact that here (and in all following formulas) the density operator ˆw of the system s of our interest is taken in the interaction picture, is just implied.

58 Named after Andrey Andreyevich Markov (1856-1922; in older Western literature, "Markoff"), a mathematician famous for his general theory of the so-called Markov processes, whose future development is completely determined by its present state, but not its pre-history.

59 Here, rather reluctantly, I will use this standard notation, En, for the eigenenergies of our system of interest (s), in hope that the reader would not confuse these discrete energy levels with the quasi-continuous energy levels of its environment (e), participating in particular in Eqs. (108) and (111). As a reminder, by this stage of our calculations, the environment levels have disappeared from our formulas, leaving behind their functionals KF(τ) and G(τ).

60This is essentially the same rotating-wave approximation (RWA) as was used in Sec. 6.5.

61 As was already discussed in Sec. 4 , the lower-limit substitution (t′=−∞) in the integrals participating in Eq. (183) gives zero, due to the finite-time "memory" of the system, expressed by the decay of the correlation and response functions at large values of the time delay τ=t−t ’.

62 The master equations, first introduced to quantum mechanics in 1928 by W. Pauli, are sometimes called the "Pauli master equations", or "kinetic equations", or "rate equations".

63 As Eq. (193) shows, the term with m=n would vanish and thus may be legitimately excluded from the sum.

64 As Eq. (193) shows, the result for Γn→n′ is described by Eq. (195) as well, provided that the indices n and n ’ are swapped in all components of its right-hand side, including the swap ωnn′→ωn′n=−ωnn.

65 It is straightforward (and highly recommended to the reader) to show that at low temperatures (kBT<<∣En′− En∣ ), Eq. (196) gives the same result as the Golden Rate formula (6.111), with A=x. (The low-temperature condition ensures that the initial occupancy of the excited level n is negligible, as was assumed at the derivation of Eq. (6.111).)

66 Sometimes Eq. (200) (in any of its numerous alternative forms) is called the Redfield equation, after the 1965 work by A. Redfield. Note, however, that in the mid-1960s several other authors, notably including (in the alphabetical order) H. Haken, W. Lamb, M. Lax, W. Louisell, and M. Scully, also made major contributions to the very fast development of the density-matrix approach to open quantum systems.

67 Such corrections are sometimes called Lamb shifts, due to their conceptual similarity to the genuine Lamb shift - the effect first observed experimentally in 1947 by Willis Lamb and Robert Retherford: a minor difference between energy levels of the 2s and 2p states of hydrogen, due to the electric-dipole coupling of hydrogen atoms to the free-space electromagnetic environment. (These energies are equal not only in the non-relativistic theory described in Sec. 3.6 but also in the relativistic theory (see Secs. 6.3, 9.7), if the electromagnetic environment is ignored.) The explanation of the Lamb shift by H. Bethe, in the same 1947, essentially launched the whole field of quantum electrodynamics - to be briefly discussed in Chapter 9.

68 The first form of Eq. (203), as well as the analysis of Sec. 3, implies that low-frequency fluctuations of any other origin, not taken into account in own current analysis (say, an unintentional noise from experimental equipment), may also contribute to dephasing; such "technical fluctuations" are indeed a very serious challenge for the experimental implementation of coherent qubit systems - see Sec. 8.5 below.

69 As was discussed in Sec. 5.1, the tunneling may be described by using, instead of Eq. (70), the full two-level Hamiltonian (5.3). Let me leave for the reader’s exercise to spell out the equations for the time evolution of the density matrix elements of this system, and of the expectation values of the Pauli operators, for this case.

70 This coefficient participates prominently in the classical theory of damped oscillations (see, e.g., CM Sec. 5.1), in particular defining the oscillator’s Q-factor as Q \equiv \omega_{0} / 2 \delta, and the decay time of the amplitude A and the energy E of free oscillations: A(t)=A(0) \exp \{-\delta t\}, E(t)=E(0) \exp \{-2 \delta t\}.

{ }^{71} Sometimes Eq. (207) is called the Lindblad equation, but I believe this terminology is inappropriate. It is true that its structure falls into a general category of equations, suggested by G. Lindblad in 1976 for the density operators in the Markov approximation, whose diagonalized form in the interaction picture is \dot{\hat{w}}=\sum_{j} \gamma_{j}\left(2 \hat{L}_{j} \hat{w} \hat{L}_{j}^{\dagger}-\left\{\hat{L}_{j} \hat{L}_{j}^{\dagger}, \hat{w}\right\}\right) . However, Eq. (207) was derived much earlier (by L. Landau in 1927 for zero temperature, and by M. Lax in 1960 for an arbitrary temperature), and in contrast to the general Lindblad equation, spells out the participating operators \hat{L}_{j} and coefficients \gamma_{j} for a particular physical system - the harmonic oscillator.

{ }^{72} Note, however, that this is not true for many applications, in which a damped oscillator is also under the effect of an external time-dependent field, which may be described by additional, typically off-diagonal terms on the right-hand side of Eqs. (205).

{ }^{73} The reader may like to have a look at the results of nice measurements of such functions W_{n}(t) in microwave oscillators, performed using their coupling with Josephson-junction circuits: H. Wang et al., Phys. Rev. Lett. 101, 240401 (2008), and with Rydberg atoms: M. Brune et al., Phys. Rev. Lett. 101, 240402 (2008).

{ }^{74} In the classical limit n_{\mathrm{e}}>>1, Eq. (214) is analytically solvable for any initial conditions - see, e.g., the paper by B. Zeldovich et al., Sov. Phys. JETP 28, 308 (1969), which also gives some more intricate solutions of Eqs. (208)-(209). Note, however, that the most important properties of the damped harmonic oscillator (including its relaxation dynamics) may be analyzed simpler by using the Heisenberg-Langevin approach discussed in the previous section.

{ }^{75} Note that Eq. (224), as well as the original Einstein’s relation between the diffusion coefficient D in the direct space and temperature, may be derived much simpler by other means - for example, from the Nyquist formula (139). These issues are discussed in detail in SM Chapter 5 .

{ }^{76} Moreover, Eq. (223) may be generalized to the motion of a quantum particle in an additional periodic potential U(\mathbf{r}). In this case, due to the band structure of the energy spectrum (which was discussed in Secs. 2.7 and 3.4), the coupling to the environment produces not only a continuous drift-diffusion of the probability density in the space of the quasimomentum \hbar \mathbf{q} but also quantum transitions between different energy bands at the same \hbar \mathbf{q}- see, e.g., K. Likharev and A. Zorin, J. Low Temp. Phys. 59, 347 (1985).

{ }^{77} See SM Secs. 5.6-5.7. For a more detailed discussion of quantum effects in dissipative systems with continuous spectra see, e. g., either U. Weiss, Quantum Dissipative Systems, 2^{\text {nd }} ed., World Scientific, 1999, or H.-P. Breuer and F. Petruccione, The Theory of Open Quantum Systems, Oxford U. Press, 2007 .